RAG Agent#

This notebook takes inspiration from the Pydantic-AI RAG tutorial and simplifies its approach.

Context#

Our objective is to create an assistant that answers questions about Logfire (developed by Pydantic) using the project’s documentation as a knowledge base. We make this RAG agentic by providing a retrieve function as a tool for the LLM.

flowchart TD

A(User) -->|Ask a question| B(Agent)

B --> |Messages + retrieve tool|C{LLM}

C --> |Text or Tool Calling response|B

B --> |Vectorize user query |D(Knowledge base)

D --> |Return documents closest to query|B

B --> |Return answer|A

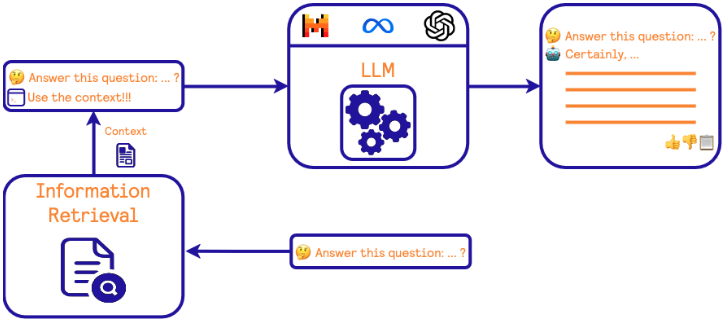

In this system, the LLM has access to the “retrieve” tool, which it may or may not invoke in its response. If invoked, the tool call is parsed by the LLM client and returned as a structured response to the agent, which executes the requested function.

This differs from workflow-based RAG, where the retrieve function is always executed before calling the LLM. In workflow-based RAG, the LLM prompt is a concatenation of the initial prompt and the retrieved content. For more detailed information, we recommend exploring Ragger-Duck, a RAG implementation developed by scikit-learn core developers.

A RAG workflow (source: Ragger-Duck)#

RAG Workflow vs Agent-Based RAG#

We revisit the “workflow vs agent-based systems” tradeoff mentioned in the previous notebook. This assistant use-case requires a high degree of flexibility, as user queries are arbitrary. To reduce costs and latency, we only query the knowledge base when necessary based on user input.
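The difference between the two designs can be sketched with stand-ins for the model and the knowledge base. Everything here is hypothetical: `toy_llm`, its keyword rule, and the dictionary-shaped responses are illustrative assumptions, not the behavior of a real LLM client or of pydantic-ai.

```python
calls = []  # every knowledge-base lookup is recorded here


def retrieve(search_query: str) -> str:
    """Hypothetical stand-in for the knowledge-base lookup."""
    calls.append(search_query)
    return f"[documentation matching '{search_query}']"


def toy_llm(prompt: str, tools_enabled: bool = False) -> dict:
    """Hypothetical stand-in for an LLM client.

    With tools enabled, it requests a retrieval only when the prompt
    mentions Logfire; otherwise it answers with plain text.
    """
    if tools_enabled and "logfire" in prompt.lower():
        return {"tool_call": "retrieve", "args": {"search_query": prompt}}
    return {"text": f"answer to: {prompt}"}


def workflow_rag(question: str) -> str:
    # Retrieval always runs, and its output is concatenated to the prompt.
    context = retrieve(question)
    return toy_llm(f"{context}\n\n{question}")["text"]


def agentic_rag(question: str) -> str:
    # First call: the model either answers directly or requests the tool.
    response = toy_llm(question, tools_enabled=True)
    if "tool_call" in response:
        # The agent executes the requested function, then calls the model again.
        context = retrieve(**response["args"])
        response = toy_llm(f"{context}\n\n{question}")
    return response["text"]


workflow_rag("How do I bake lasagna?")
print(len(calls))  # the workflow queried the knowledge base anyway
agentic_rag("How do I bake lasagna?")
print(len(calls))  # unchanged: the agent skipped retrieval
agentic_rag("How do I configure Logfire?")
print(len(calls))  # one more lookup, requested by the model
```

In this toy setup, the workflow pays for a lookup on every question, while the agent pays for one extra model call only when retrieval is actually requested.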

Implementation#

Design choices#

We simplify the original pydantic tutorial with the following optimizations:

No vector database:

For small knowledge bases, overall latency can be reduced by keeping the data in memory using a dataframe or a numpy array, persisted with diskcache.

Approximate nearest neighbors (ANN) operations, typically provided by a vector database, can be replaced by a simple Nearest Neighbors estimator from scikit-learn.

Batch vectorization:

The content of the knowledge base is vectorized in a single batch rather than looping through each element individually.

Local vectorization:

To reduce API costs, we vectorize content locally by downloading a text vectorizer from HuggingFace. This is achieved using skrub, which wraps the sentence-transformers library to provide a scikit-learn-compatible transformer. No GPU is required.

Overall, this approach is faster to execute while remaining scalable for reasonably sized knowledge bases, making it more efficient than the original Pydantic tutorial.

Building the knowledge base#

We begin by fetching the Logfire documentation archived online. Next, we use skrub’s dataframe visualization to display long text more efficiently (click on any cell to view its text content).

import pandas as pd

import requests

from skrub import patch_display

# Replace pandas' dataframe visualisation with skrub's

patch_display()

DOCS_JSON = (

'https://gist.githubusercontent.com/'

'samuelcolvin/4b5bb9bb163b1122ff17e29e48c10992/raw/'

'80c5925c42f1442c24963aaf5eb1a324d47afe95/logfire_docs.json'

)

doc_pages = pd.DataFrame(

requests.get(DOCS_JSON).json()

)

doc_pages

We now use a text embedding model to get a vectorized representation of each page of our knowledge base. We can choose among various types of vectorizers:

| Type | Example | Advantages | Caveats |
|---|---|---|---|
| Ngram-based | BM25, TF-IDF, LSA, MinHash | Fast and cheap to train. Good baselines. | Lack flexibility, corpus dependent. |
| Pre-trained text encoder, open weight | BERT, e5-v2, any model on sentence-transformers | More powerful embedding representations, local inference. | Requires installing pytorch and extra dependencies. |
| Pre-trained text encoder, commercial API | OpenAI text-embedding-3-small | Most powerful representations, using techniques like Matryoshka representation learning (also available on sentence-transformers). Easy API integration. | Inference costs, reliance on a third party, closed weights, batch size < 2048. |
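To make the first row of the table concrete, here is a minimal TF-IDF retrieval sketch using only the standard library. The three toy documents, the whitespace tokenizer, and the smoothed IDF formula are assumptions chosen for brevity; this is not what the notebook uses for its knowledge base.

```python
import math
from collections import Counter

# Hypothetical toy corpus standing in for documentation pages.
docs = [
    "configure logfire with fastapi",
    "instrument psycopg with logfire",
    "roadmap and planned features",
]


def tokenize(text):
    return text.lower().split()


# Inverse document frequency, learned from the corpus itself.
n_docs = len(docs)
doc_freq = Counter(tok for doc in docs for tok in set(tokenize(doc)))
idf = {tok: math.log(n_docs / count) + 1.0 for tok, count in doc_freq.items()}


def tfidf(text):
    # Tokens never seen in the corpus are dropped: the representation
    # is corpus dependent, as noted in the table.
    counts = Counter(tokenize(text))
    return {tok: n * idf[tok] for tok, n in counts.items() if tok in idf}


def cosine(a, b):
    dot = sum(weight * b.get(tok, 0.0) for tok, weight in a.items())
    norm_a = math.sqrt(sum(w * w for w in a.values()))
    norm_b = math.sqrt(sum(w * w for w in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


doc_vectors = [tfidf(doc) for doc in docs]


def search(query):
    """Return the index of the best-scoring document and all scores."""
    query_vector = tfidf(query)
    scores = [cosine(query_vector, vec) for vec in doc_vectors]
    best = max(range(n_docs), key=scores.__getitem__)
    return best, scores


print(search("fastapi setup"))
print(search("lasagna recipe"))
```

The second query shares no token with the corpus, so every score is zero. This illustrates the lack of flexibility of n-gram methods compared with pre-trained encoders, which can still place unseen wording somewhere in the embedding space.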

For this example, we choose the second option, as it reduces inference cost. skrub’s TextEncoder downloads the specified model locally using sentence-transformers, transformers and pytorch before generating the embeddings for the knowledge base.

from skrub import TextEncoder

text_encoder = TextEncoder(

model_name="sentence-transformers/paraphrase-albert-small-v2",

n_components=None,

)

embeddings = text_encoder.fit_transform(doc_pages["content"])

embeddings.shape

(299, 768)

Next, we use scikit-learn NearestNeighbors to perform exact retrieval. Note that this operation has a time complexity of \(O(d \times N)\), where \(d\) is the dimensionality of our embedding vectors, and \(N\) the number of elements to scan. For larger knowledge bases, using Approximate Nearest Neighbors, with techniques like HNSW (implemented by faiss) or random projections (implemented by Annoy) is recommended, as these reduce the retrieval time complexity to \(O(d \times \log(N))\).
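The linear scan behind exact retrieval can be sketched in plain Python, which makes the \(O(d \times N)\) cost visible: one pass over the \(N\) stored vectors, each requiring \(d\) operations. The function name `knn_exact` and the toy vectors are illustrative assumptions; scikit-learn's actual implementation is vectorized and may select a different algorithm.

```python
import heapq
import math


def knn_exact(query, vectors, k):
    """Return the k (distance, index) pairs closest to `query`."""
    scored = []
    for index, vector in enumerate(vectors):  # N iterations
        # d subtractions and multiplications per stored vector
        dist = math.sqrt(sum((q - v) ** 2 for q, v in zip(query, vector)))
        scored.append((dist, index))
    # Keep the k smallest distances, sorted in increasing order.
    return heapq.nsmallest(k, scored)


vectors = [[0.0, 0.0], [1.0, 0.0], [0.0, 2.0], [3.0, 3.0]]
for dist, index in knn_exact([0.9, 0.1], vectors, k=2):
    print(index, round(dist, 3))
```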

We return the indices of the 8 closest matches and their distances for two queries: one related to our knowledge base topic (Logfire) and another one unrelated (cooking).

from sklearn.neighbors import NearestNeighbors

nn = NearestNeighbors(n_neighbors=8).fit(embeddings)

query_embedding = text_encoder.transform(

pd.Series([

"How do I configure logfire to work with FastAPI?",

"I'm a chef, explain how to bake Lasagnas.",

])

)

distances, indices = nn.kneighbors(query_embedding, return_distance=True)

print(distances[0])

doc_pages.iloc[indices[0]]

[12.45328426 13.05936241 13.30593491 13.38497925 13.43500328 13.65298176

13.69662762 13.72200966]

We observe that we successfully retrieved content related to FastAPI in the documentation. What are the results for the unrelated query?

print(distances[1])

doc_pages.iloc[indices[1]]

[15.43010139 15.45553875 15.55194283 15.60620213 15.61275005 15.61703777

15.67152786 15.82261467]

For the second query, we can hardly discern a link between the retrieved items and the original question. However, notice that their distances are higher than those of the first query. This means that no article closely matches the second query.

The distances for the first and second queries remain fairly close to each other, though. This issue is commonly referred to as the curse of dimensionality: items in high-dimensional spaces tend to all appear “far” from each other, because the hyper-volume grows exponentially with the number of dimensions. Real-world implementations require a careful evaluation of retrieval system performance, which we skip here.
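This concentration of distances can be reproduced with random data. The sketch below is an assumption-laden illustration: it draws uniform random points rather than real text embeddings, and `relative_contrast` is a hypothetical helper measuring how much farther the farthest point is than the nearest one.

```python
import math
import random


def relative_contrast(n_dims, n_points=200, seed=0):
    """(farthest - nearest) / nearest distance from a random query."""
    rng = random.Random(seed)
    query = [rng.random() for _ in range(n_dims)]
    distances = []
    for _ in range(n_points):
        point = [rng.random() for _ in range(n_dims)]
        distances.append(math.dist(query, point))
    return (max(distances) - min(distances)) / min(distances)


# The contrast shrinks as the number of dimensions grows.
for n_dims in (2, 32, 768):
    print(n_dims, round(relative_contrast(n_dims), 2))
```

When the contrast is small, the nearest and farthest neighbors are almost equally distant, which is why raw distances alone are a weak relevance signal in high dimensions.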

A possible filtering method would be to set a radius, i.e., a maximum distance beyond which retrieved elements are discarded. As shown below, the second query results in an empty set, as all of its Euclidean distances exceed 14.

nn.radius_neighbors(

query_embedding,

radius=14,

return_distance=True,

sort_results=True,

)

(array([array([12.45328426, 13.05936241, 13.30593491, 13.38497925, 13.43500328,

13.65298176, 13.69662762, 13.72200966, 13.92671013, 13.94815063,

13.99003315]) ,

array([], dtype=float64)], dtype=object), array([array([ 8, 111, 113, 15, 112, 13, 35, 114, 67, 94, 33]),

array([], dtype=int64)], dtype=object))
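The same cutoff can also be applied after a fixed-size `kneighbors` call. The sketch below reuses the distances printed earlier for the two queries, rounded to two decimals; the helper `within_radius` is a hypothetical name introduced for illustration.

```python
# Distances of the 8 nearest neighbors for each query, rounded to 2 decimals.
distances = [
    [12.45, 13.06, 13.31, 13.38, 13.44, 13.65, 13.70, 13.72],  # FastAPI query
    [15.43, 15.46, 15.55, 15.61, 15.61, 15.62, 15.67, 15.82],  # lasagna query
]


def within_radius(row, radius):
    """Keep the (rank, distance) pairs whose distance does not exceed radius."""
    return [(rank, dist) for rank, dist in enumerate(row) if dist <= radius]


for row in distances:
    kept = within_radius(row, radius=14)
    print(len(kept))  # 8 for the FastAPI query, 0 for the lasagna query
```

Unlike `radius_neighbors`, this variant caps the number of results at 8, which keeps the size of the prompt sent to the LLM bounded.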

We can emulate persistence on disk using diskcache. Originally designed as a fast key-value storage solution for Django, it can also be applied in our agentic context. Here, we serialize the knowledge base content, our text encoder, and the fitted nearest neighbors estimator.

import diskcache as dc

cache = dc.Cache('tmp')

cache["doc_pages"] = doc_pages

cache["text_encoder"] = text_encoder

cache["nn"] = nn

Defining the Agent#

We define our pydantic-ai Agent and register its retrieve function as a tool. Notice how pydantic-ai enables you to specify a schema for the dependency Deps, which is made available to the tool through a RunContext during tool execution.

For this example, we use OpenAI GPT-4o-mini rather than Llama3.3-70B with Groq’s free tier as Groq currently struggles with tool calling.

import logfire

from dotenv import load_dotenv

from dataclasses import dataclass

from pydantic_ai import RunContext

from pydantic_ai.agent import Agent

import nest_asyncio

# Load the 'OPENAI_API_KEY' environment variable from a source file.

load_dotenv()

# Enable nested event loop in jupyter notebooks to run pydantic-ai

# asynchronous coroutines.

nest_asyncio.apply()

# Some boilerplate around logging.

logfire.configure(scrubbing=False)

@dataclass
class Deps:
    text_encoder: TextEncoder
    nn: NearestNeighbors
    doc_pages: pd.DataFrame

system_prompt = (

"You are a documentation assistant. Your objective is to answer user questions "

"by retrieving the right articles in the documentation. "

"Don't look-up the documentation if the question is unrelated to LogFire "

"or Pydantic. "

)

agent = Agent(

'openai:gpt-4o-mini',

system_prompt=system_prompt,

deps_type=Deps,

)

def make_prompt(pages):
    # Build one markdown-like block per retrieved page, then join them.
    return "\n\n".join(
        (
            "# " + pages["title"]
            + "\nDocumentation URL:" + pages["path"]
            + "\n\n" + pages["content"] + "\n"
        ).tolist()
    )

@agent.tool
async def retrieve(context: RunContext[Deps], search_query: str) -> str:
    """Retrieve documentation sections based on a search query.

    Args:
        context: The call context.
        search_query: The search query.
    """
    with logfire.span(f'create embedding for {search_query=}'):
        query_embedding = context.deps.text_encoder.transform(
            pd.Series([search_query]),
        )

    # Indices of the closest pages, flattened from shape (1, k) to (k,).
    indices = (
        context.deps.nn.kneighbors(query_embedding, return_distance=False)
        .squeeze()
    )
    pages = context.deps.doc_pages.iloc[indices]
    doc_retrieved = make_prompt(pages)
    print(doc_retrieved)

    return doc_retrieved

Finally, we define our main coroutine entry point to run the agent.

async def run_agent(question: str):
    """
    Entry point to run the agent and perform RAG based question answering.
    """
    logfire.info(f'Asking "{question}"')

    # Reload the knowledge base, encoder and estimator persisted earlier.
    cache = dc.Cache('tmp')
    deps = Deps(
        text_encoder=cache["text_encoder"],
        nn=cache["nn"],
        doc_pages=cache["doc_pages"],
    )

    return await agent.run(question, deps=deps)

Results#

We are now ready to run our system! Logfire will generate logs that help us observe each internal step.

import asyncio

answer = asyncio.run(

run_agent("Can you summarize the roadmap for Logfire?")

)

18:22:17.971 Asking "Can you summarize the roadmap for Logfire?"

18:22:17.974 agent run prompt=Can you summarize the roadmap for Logfire?

18:22:17.974 preparing model and tools run_step=1

18:22:17.975 model request

Logfire project URL:

https://logfire.pydantic.dev/vincent-maladiere/testing-pydantic

18:22:18.963 handle model response

18:22:18.965 running tools=['retrieve']

18:22:18.966 create embedding for search_query='LogFire roadmap'

# Roadmap

Documentation URL:roadmap.md

Here is the roadmap for **Pydantic Logfire**. This is a living document, and it will be updated as we progress.

If you have any questions, or a feature request, **please join our [Slack][slack]**.

# Features 💡

Documentation URL:roadmap.md

There are a lot of features that we are planning to implement in Logfire. Here are some of them.

# Language Support

Documentation URL:roadmap.md

Logfire is built on top of OpenTelemetry, which means that it supports all the languages that OpenTelemetry supports.

Still, we are planning to create custom SDKs for JavaScript, TypeScript, and Rust, and make sure that the

attributes are displayed in a nice way in the Logfire UI — as they are for Python.

For now, you can check our [Alternative Clients](guides/advanced/alternative-clients.md) section to see how

you can send data to Logfire from other languages.

See [this GitHub issue][language-support-gh-issue] for more information.

# Next steps

Documentation URL:index.md

Ready to keep going?

- Read about [Tracing with Spans](get-started/traces.md)

- Complete the [Onboarding Checklist](guides/onboarding-checklist/index.md)

More topics to explore

- Logfire's real power comes from [integrations with many popular libraries](integrations/index.md)

- As well as spans, you can [use Logfire to record metrics](guides/onboarding-checklist/add-metrics.md)

- Logfire doesn't just work with Python, [read more about Language support](https://opentelemetry.io/docs/languages/){:target="_blank"}

# Pydantic Logfire

Documentation URL:index.md

From the team behind Pydantic, Logfire is a new type of observability platform built on the same belief as our open source library — that the most powerful tools can be easy to use.

Logfire is built on OpenTelemetry, and supports monitoring your application from any language, with particularly great support for Python! [Read more](why-logfire/index.md).

# or

Documentation URL:integrations/psycopg.md

logfire.instrument_psycopg('psycopg')

# Usage

Documentation URL:integrations/system-metrics.md

```py

import logfire

logfire.configure()

logfire.instrument_system_metrics()

```

Then in your project, click on 'Dashboards' in the top bar, click 'New Dashboard', and select 'Basic System Metrics (Logfire)' from the dropdown.

# To instrument the whole module:

Documentation URL:integrations/psycopg.md

logfire.instrument_psycopg(psycopg)

18:22:20.000 preparing model and tools run_step=2

18:22:20.000 model request

18:22:22.924 handle model response

Let’s now display the final response:

print(answer.data)

The roadmap for Logfire is a living document that outlines planned features and development directions. Here are the key points:

1. **Features**: Logfire is set to include various features aimed at enhancing logging and observability. Specific details are available in the documentation.

2. **Language Support**:

- Logfire is built on OpenTelemetry, supporting all languages compatible with it.

- Custom SDKs are planned for JavaScript, TypeScript, and Rust to ensure attributes are well-represented in the Logfire UI, similar to Python.

- More information can be found in the Alternative Clients section.

3. **Next Steps**: Users are encouraged to explore topics such as:

- Tracing with spans

- Completing the onboarding checklist

- Integrations with popular libraries

- Recording metrics

For ongoing updates and more detailed information, you can refer to the [full roadmap documentation](roadmap.md).

The display below shows the sequence of messages from top (oldest) to bottom (most recent).

The LLM correctly responded to our first query by calling the retrieve tool.

After retrieving the content queried by the LLM, we make another call with this content.

Finally, the LLM sends back its text response, completing the message loop.

import json

from pprint import pprint

pprint(

json.loads(answer.all_messages_json())

)

[{'kind': 'request',

'parts': [{'content': 'You are a documentation assistant. Your objective is '

'to answer user questions by retrieving the right '

"articles in the documentation. Don't look-up the "

'documentation if the question is unrelated to LogFire '

'or Pydantic. ',

'part_kind': 'system-prompt'},

{'content': 'Can you summarize the roadmap for Logfire?',

'part_kind': 'user-prompt',

'timestamp': '2025-01-05T18:22:17.974732Z'}]},

{'kind': 'response',

'parts': [{'args': {'args_json': '{"search_query":"LogFire roadmap"}'},

'part_kind': 'tool-call',

'tool_call_id': 'call_EMpVwW5Z89eoIa8UBb5LJfox',

'tool_name': 'retrieve'}],

'timestamp': '2025-01-05T18:22:18Z'},

{'kind': 'request',

'parts': [{'content': '# Roadmap\n'

'Documentation URL:roadmap.md\n'

'\n'

'Here is the roadmap for **Pydantic Logfire**. This is '

'a living document, and it will be updated as we '

'progress.\n'

'\n'

'If you have any questions, or a feature request, '

'**please join our [Slack][slack]**.\n'

'\n'

'\n'

'# Features 💡\n'

'Documentation URL:roadmap.md\n'

'\n'

'There are a lot of features that we are planning to '

'implement in Logfire. Here are some of them.\n'

'\n'

'\n'

'# Language Support\n'

'Documentation URL:roadmap.md\n'

'\n'

'Logfire is built on top of OpenTelemetry, which means '

'that it supports all the languages that OpenTelemetry '

'supports.\n'

'\n'

'Still, we are planning to create custom SDKs for '

'JavaScript, TypeScript, and Rust, and make sure that '

'the\n'

'attributes are displayed in a nice way in the Logfire '

'UI — as they are for Python.\n'

'\n'

'For now, you can check our [Alternative '

'Clients](guides/advanced/alternative-clients.md) '

'section to see how\n'

'you can send data to Logfire from other languages.\n'

'\n'

'See [this GitHub issue][language-support-gh-issue] '

'for more information.\n'

'\n'

'\n'

'# Next steps\n'

'Documentation URL:index.md\n'

'\n'

'Ready to keep going?\n'

'\n'

'- Read about [Tracing with '

'Spans](get-started/traces.md)\n'

'- Complete the [Onboarding '

'Checklist](guides/onboarding-checklist/index.md)\n'

'\n'

'More topics to explore\n'

'\n'

"- Logfire's real power comes from [integrations with "

'many popular libraries](integrations/index.md)\n'

'- As well as spans, you can [use Logfire to record '

'metrics](guides/onboarding-checklist/add-metrics.md)\n'

"- Logfire doesn't just work with Python, [read more "

'about Language '

'support](https://opentelemetry.io/docs/languages/){:target="_blank"}\n'

'\n'

'\n'

'# Pydantic Logfire\n'

'Documentation URL:index.md\n'

'\n'

'From the team behind Pydantic, Logfire is a new type '

'of observability platform built on the same belief as '

'our open source library — that the most powerful '

'tools can be easy to use.\n'

'\n'

'Logfire is built on OpenTelemetry, and supports '

'monitoring your application from any language, with '

'particularly great support for Python! [Read '

'more](why-logfire/index.md).\n'

'\n'

'\n'

'# or\n'

'Documentation URL:integrations/psycopg.md\n'

'\n'

"logfire.instrument_psycopg('psycopg')\n"

'\n'

'\n'

'# Usage\n'

'Documentation URL:integrations/system-metrics.md\n'

'\n'

'```py\n'

'import logfire\n'

'\n'

'logfire.configure()\n'

'\n'

'logfire.instrument_system_metrics()\n'

'```\n'

'\n'

"Then in your project, click on 'Dashboards' in the "

"top bar, click 'New Dashboard', and select 'Basic "

"System Metrics (Logfire)' from the dropdown.\n"

'\n'

'\n'

'# To instrument the whole module:\n'

'Documentation URL:integrations/psycopg.md\n'

'\n'

'logfire.instrument_psycopg(psycopg)\n',

'part_kind': 'tool-return',

'timestamp': '2025-01-05T18:22:19.999467Z',

'tool_call_id': 'call_EMpVwW5Z89eoIa8UBb5LJfox',

'tool_name': 'retrieve'}]},

{'kind': 'response',

'parts': [{'content': 'The roadmap for Logfire is a living document that '

'outlines planned features and development directions. '

'Here are the key points:\n'

'\n'

'1. **Features**: Logfire is set to include various '

'features aimed at enhancing logging and '

'observability. Specific details are available in the '

'documentation.\n'

'\n'

'2. **Language Support**:\n'

' - Logfire is built on OpenTelemetry, supporting '

'all languages compatible with it.\n'

' - Custom SDKs are planned for JavaScript, '

'TypeScript, and Rust to ensure attributes are '

'well-represented in the Logfire UI, similar to '

'Python.\n'

' - More information can be found in the Alternative '

'Clients section.\n'

'\n'

'3. **Next Steps**: Users are encouraged to explore '

'topics such as:\n'

' - Tracing with spans\n'

' - Completing the onboarding checklist\n'

' - Integrations with popular libraries\n'

' - Recording metrics\n'

'\n'

'For ongoing updates and more detailed information, '

'you can refer to the [full roadmap '

'documentation](roadmap.md).',

'part_kind': 'text'}],

'timestamp': '2025-01-05T18:22:20Z'}]
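To read such a message loop at a glance, the structure can be condensed to one line per message. The sketch below assumes the same `kind` / `parts` / `part_kind` layout as the output above, with the long contents replaced by a placeholder; `summarize` and `used_tools` are hypothetical helpers, not part of pydantic-ai.

```python
# Same layout as the printed messages, with long contents elided.
messages = [
    {"kind": "request", "parts": [
        {"part_kind": "system-prompt", "content": "<elided>"},
        {"part_kind": "user-prompt",
         "content": "Can you summarize the roadmap for Logfire?"},
    ]},
    {"kind": "response", "parts": [
        {"part_kind": "tool-call", "tool_name": "retrieve",
         "args": {"args_json": '{"search_query":"LogFire roadmap"}'}},
    ]},
    {"kind": "request", "parts": [
        {"part_kind": "tool-return", "tool_name": "retrieve",
         "content": "<elided>"},
    ]},
    {"kind": "response", "parts": [
        {"part_kind": "text", "content": "<elided>"},
    ]},
]


def summarize(messages):
    """One line per message: its kind followed by the kinds of its parts."""
    lines = []
    for message in messages:
        part_kinds = [part["part_kind"] for part in message["parts"]]
        lines.append(f"{message['kind']}: {', '.join(part_kinds)}")
    return lines


def used_tools(messages):
    """Names of the tools requested by the model, in call order."""
    return [
        part["tool_name"]
        for message in messages
        for part in message["parts"]
        if part["part_kind"] == "tool-call"
    ]


print("\n".join(summarize(messages)))
print(used_tools(messages))
```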

Let’s now observe the agent behavior for an unrelated query.

unrelated_answer = asyncio.run(

run_agent("I'm a chef, explain how to bake a delicious brownie?")

)

18:22:22.930 Asking "I'm a chef, explain how to bake a delicious brownie?"

18:22:22.932 agent run prompt=I'm a chef, explain how to bake a delicious brownie?

18:22:22.933 preparing model and tools run_step=1

18:22:22.933 model request

18:22:23.746 handle model response

Since we specified in the agent system prompt not to perform a retrieval operation for unrelated questions, the agent responds with a plain text message indicating its inability to answer.

print(unrelated_answer.data)

I'm sorry, but I can only assist with questions related to LogFire or Pydantic. If you have any questions about these topics, feel free to ask!

As expected, the message loop is smaller since the LLM didn’t invoke the retrieve function, resulting in less latency and lower inference costs.

pprint(

json.loads(unrelated_answer.all_messages_json())

)

[{'kind': 'request',

'parts': [{'content': 'You are a documentation assistant. Your objective is '

'to answer user questions by retrieving the right '

"articles in the documentation. Don't look-up the "

'documentation if the question is unrelated to LogFire '

'or Pydantic. ',

'part_kind': 'system-prompt'},

{'content': "I'm a chef, explain how to bake a delicious brownie?",

'part_kind': 'user-prompt',

'timestamp': '2025-01-05T18:22:22.932962Z'}]},

{'kind': 'response',

'parts': [{'content': "I'm sorry, but I can only assist with questions "

'related to LogFire or Pydantic. If you have any '

'questions about these topics, feel free to ask!',

'part_kind': 'text'}],

'timestamp': '2025-01-05T18:22:23Z'}]
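The latency gap can be estimated from the Logfire timestamps printed during the two runs. This is a rough sketch: it measures the time between the first and last log line of each run, which only approximates end-to-end latency, and `elapsed_seconds` is a hypothetical helper.

```python
from datetime import datetime


def elapsed_seconds(start, end):
    """Seconds between two same-day 'HH:MM:SS.mmm' log timestamps."""
    fmt = "%H:%M:%S.%f"
    delta = datetime.strptime(end, fmt) - datetime.strptime(start, fmt)
    return delta.total_seconds()


# First and last log timestamps of each run, copied from the logs above.
related = elapsed_seconds("18:22:17.971", "18:22:22.924")
unrelated = elapsed_seconds("18:22:22.930", "18:22:23.746")

print(f"{related:.3f} s vs {unrelated:.3f} s")  # 4.953 s vs 0.816 s
```

On this single run, skipping retrieval saved one model round trip and the embedding step, which accounts for most of the difference.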

Finally, we clean up the tmp diskcache folder.

import shutil

shutil.rmtree('tmp')

Total running time of the script: (0 minutes 29.137 seconds)