21.4 Clustering using mixture models

We have seen how the K-means algorithm can be used to cluster data vectors in . However, this method assumes that all clusters have the same spherical shape, which is a very restrictive assumption.

In addition, K-means assumes that all clusters can be described by Gaussian in the input space, so it can’t be applied to categorical data.

We can overcome both of these issues with mixture models.

21.4.1 Mixtures of Gaussians

Recall from section 3.5.1 that a Gaussian mixture model (GMM) has the form:

If we know the model parameters, , we can use the Bayes rule to compute the responsibility (posterior membership probability) of a cluster for the data point :

We can then compute the most probable cluster assignment:

This is known as hard clustering.

21.4.1.1 K-means is a special case of EM

We can estimate the parameter of a GMM using the EM algorithm (section 8.7.3).

It turns out K-means is a special case of this algorithm, which makes two assumptions:

- We fix and for all clusters, so we just have to estimate the means

- We approximate the E step by replacing the soft responsibilities with hard assignment, i.e. we set instead of .

With this approximation, the weighted MLE problem of the M step reduces to equation:

So we recover K-means.

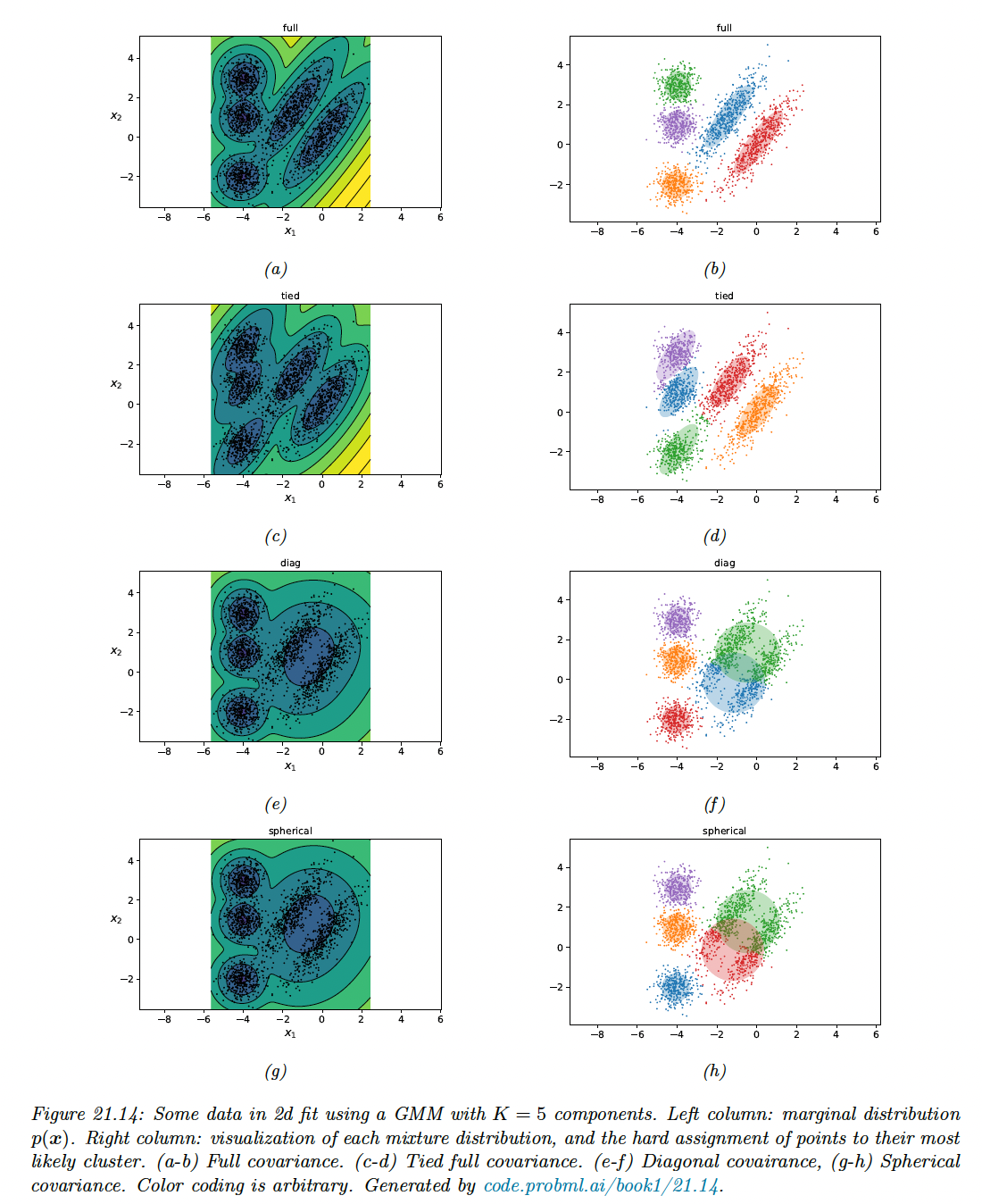

However, the assumption that all cluster have the same spherical shape is very restrictive. The figure below shows the marginal density and clusters induced by different covariance matrix shape.

We see that for this dataset, we need to capture off-diagonal covariance (top row).

21.4.2 Mixtures of Bernoullis

We can use a mixtures of Bernoulli to cluster binary data (as discussed in section 3.5.2). The model has the form:

Here is the probability that bits turns on in cluster .

We can fit this model using EM, SGD or MCMC.