9.3 Naive Bayes classifiers

We discuss a simple generative approach where all features are considered conditionally independent given the class label.

Even if this assumption is not valid, it results in good classifiers. Since the model has only features, it is immune to overfitting.

We use the following class conditional density:

Hence, the posterior is:

where is the prior probability density, and are all the parameters.

9.3.1 Example models

We need to specify the conditional likelihood, given the nature of the features:

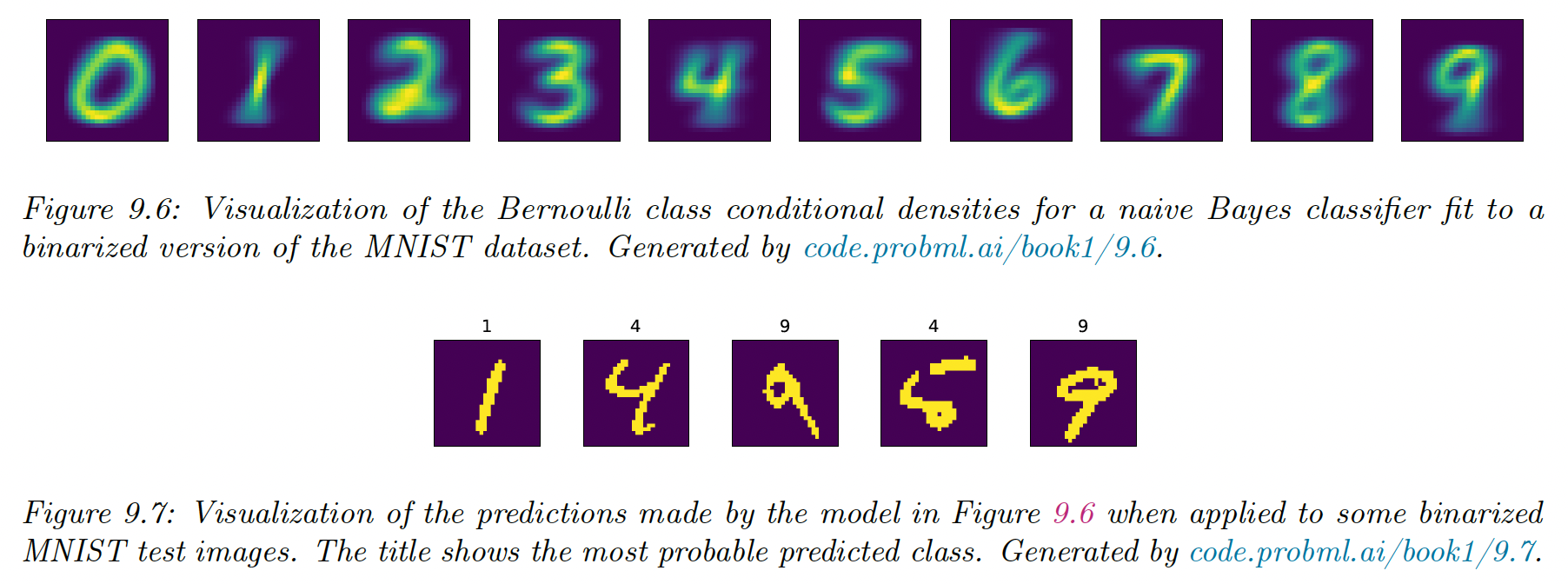

- If is binary, we can use the Bernoulli distribution:

where is the probability that in class .

This is called the Bernoulli multivariate naive model. This approach to MNIST has a surprisingly good 84% accuracy.

- If is categorical, we can use the categorical distribution:

- If , we use the Gaussian distribution:

9.3.2 Model fitting

We can fit the Naive Bayes Classifier using the MLE:

So:

The MLE for the prior is:

The MLE for depends on the class conditional density:

- If is binary, we use the Bernoulli, so:

- If is categorical, we use the Categorical, so:

- If , the Gaussian gives us:

9.3.3 Bayesian Naive Bayes

We now compute the posterior distribution over the parameters. Let's assume we have categorical features, so:

where .

We show in section 4.6.3 that the conjugate is the Dirichlet distribution:

Similarly, we use a Dirichlet distribution for the prior:

We can compute the posterior in close form:

Where and

We derive the posterior predictive distribution as follows.

The prior over the label is given by:

where

For the features, we have:

where

is the posterior mean of the parameters.

If , this reduces to the MLE

If , we add 1 to the empirical count before normalizing. This is called Laplace smoothing.

In the binary, case this gives us:

Once we have estimated the parameter posterior, the predicted distribution over the label is:

This gives us a fully Bayesian form of naive Bayes, in which we have integrated out all the parameters (the posterior mean parameters)

9.3.4 The connection between naive Bayes and Logistic regression

Let , so is a one-hot encoding of feature .

The class conditional density is:

So the posterior over classes is:

which can be written as softmax:

which corresponds to the multinomial logistic regression.

The difference is that the NBC minimizes the join likelihood , whereas the logistic regression minimizes the conditional likelihood