19.1 Data augmentation

Suppose we have a small training set. In some cases, we may be able to create artificially modified versions of the input vectors, which capture the kinds of variation we expect to see at test time, while keeping the original labels.

This is called data augmentation.

19.1.1 Examples

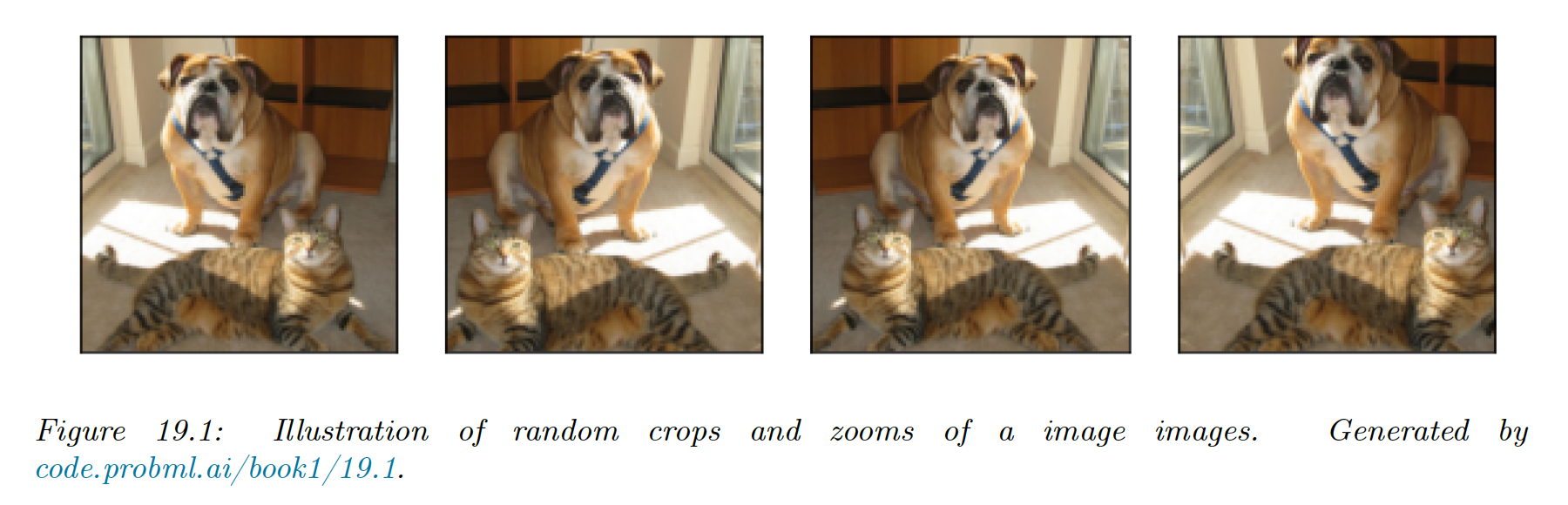

- For image classification tasks, standard data augmentation methods include random crops, zooms and mirror image flips.

- For signal processing, standard methods include adding background noise.

- For text, we can artificially replace characters or words at random in a text document.
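The kinds of augmentation listed above can be sketched in plain Python. This is a minimal illustration, not a library API. It assumes a grayscale image stored as a list of pixel rows, a 1-D signal stored as a list of floats, and a text document already split into words. All function names are hypothetical. Each function changes only the input, so the original label can be reused as-is.

```python
import random
from typing import List, Sequence

# Assumption: a grayscale image is a list of rows, each row a list of pixel values.
Image = List[List[float]]


def random_crop(img: Image, height: int, width: int, rng: random.Random) -> Image:
    """Cut a randomly placed height x width window out of the image."""
    top = rng.randint(0, len(img) - height)      # randint is inclusive on both ends
    left = rng.randint(0, len(img[0]) - width)
    return [row[left:left + width] for row in img[top:top + height]]


def horizontal_flip(img: Image) -> Image:
    """Mirror the image left to right."""
    return [row[::-1] for row in img]


def add_noise(signal: Sequence[float], sigma: float, rng: random.Random) -> List[float]:
    """Add i.i.d. Gaussian 'background' noise with standard deviation sigma."""
    return [x + rng.gauss(0.0, sigma) for x in signal]


def replace_words(words: Sequence[str], vocab: Sequence[str], p: float,
                  rng: random.Random) -> List[str]:
    """Independently replace each word, with probability p, by a random vocab word."""
    return [rng.choice(vocab) if rng.random() < p else w for w in words]


if __name__ == "__main__":
    rng = random.Random(0)
    img = [[0, 1, 2], [3, 4, 5], [6, 7, 8]]
    print(horizontal_flip(img))           # [[2, 1, 0], [5, 4, 3], [8, 7, 6]]
    print(random_crop(img, 2, 2, rng))    # one of the four 2x2 windows
    print(add_noise([1.0, 2.0, 3.0], 0.1, rng))
    print(replace_words(["the", "cat", "sat"], ["dog", "mat"], 0.3, rng))
```

In a real pipeline these would be applied freshly each time an example is drawn, so the model sees a different variant of each training point on every pass.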

If we can afford to train and test the model many times using different versions of the data, we can learn which augmentations work best, using blackbox optimization techniques such as RL or Bayesian optimization.

We can also learn to combine multiple augmentations together; this is called AutoAugment.
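A minimal sketch of searching over augmentation policies follows. It uses plain random search as a simple stand-in for the RL or Bayesian optimization mentioned above, and represents a policy as a short sequence of (operation, magnitude) pairs, loosely in the spirit of AutoAugment. `score_fn` is the expensive black box (train on augmented data, then measure validation accuracy); `toy_score` is a hypothetical placeholder so that the code runs.

```python
import random
from typing import Callable, Optional, Sequence, Tuple

# A policy is a short sequence of (operation name, magnitude) pairs.
Policy = Tuple[Tuple[str, float], ...]


def random_policy_search(
    ops: Sequence[str],
    magnitudes: Sequence[float],
    policy_len: int,
    score_fn: Callable[[Policy], float],
    n_trials: int,
    seed: int = 0,
) -> Tuple[Optional[Policy], float]:
    """Sample random policies, score each with the black box, keep the best one."""
    rng = random.Random(seed)
    best_policy: Optional[Policy] = None
    best_score = float("-inf")
    for _ in range(n_trials):
        policy = tuple(
            (rng.choice(ops), rng.choice(magnitudes)) for _ in range(policy_len)
        )
        score = score_fn(policy)  # in practice: a full train + validate run
        if score > best_score:
            best_policy, best_score = policy, score
    return best_policy, best_score


def toy_score(policy: Policy) -> float:
    """Hypothetical stand-in for validation accuracy after training."""
    # Pretend flips help in proportion to magnitude and every other op hurts.
    return sum(m if op == "flip" else -m for op, m in policy)


if __name__ == "__main__":
    ops, mags = ["flip", "crop", "noise"], [0.1, 0.5, 0.9]
    policy, score = random_policy_search(ops, mags, 2, toy_score, n_trials=50)
    print(policy, score)
```

Random search treats every trial independently; RL or Bayesian optimization instead use the scores seen so far to decide which policy to try next, which matters when each call to `score_fn` costs a full training run.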

19.1.2 Theoretical justification

Data augmentation often improves performance (predictive accuracy, robustness), because it algorithmically injects prior knowledge about which variations of the input leave the label unchanged.

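To see this, recall empirical risk minimization (ERM), where we train by minimizing the risk

$$R(f) = \int \ell(f(x), y)\, p^*(x, y)\, dx\, dy$$

where we approximate the unknown data distribution $p^*(x, y)$ by the empirical distribution

$$p_\mathcal{D}(x, y) = \frac{1}{N} \sum_{n=1}^N \delta(x - x_n)\, \delta(y - y_n)$$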
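Because the empirical distribution puts mass $1/N$ on each of the $N$ training pairs, the integral in the risk collapses to an average of the loss over the training set. A minimal sketch, assuming `f` is any callable predictor and `loss` any per-example loss (both names are illustrative):

```python
from typing import Any, Callable, Sequence, Tuple


def empirical_risk(
    f: Callable[[Any], float],
    loss: Callable[[float, Any], float],
    data: Sequence[Tuple[Any, Any]],
) -> float:
    """Risk under the empirical distribution: the mean loss over the N training pairs."""
    return sum(loss(f(x), y) for x, y in data) / len(data)


def squared_loss(pred: float, y: float) -> float:
    return (pred - y) ** 2


if __name__ == "__main__":
    data = [(1.0, 2.0), (2.0, 5.0)]
    # Predictions 2.0 and 4.0 give losses 0.0 and 1.0, so the mean is 0.5.
    print(empirical_risk(lambda x: 2 * x, squared_loss, data))  # 0.5
```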

We can think of data augmentation as replacing the empirical distribution with the following algorithmically smoothed distribution:

$$p_\mathcal{D}(x, y \mid A) = \frac{1}{N} \sum_{n=1}^N p(x \mid x_n, A)\, \delta(y - y_n)$$

where $A$ is the data augmentation algorithm, which generates a sample $x$ from a training point $x_n$ such that the label $y_n$ is unchanged.
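The risk under the smoothed distribution can be estimated by Monte Carlo: for each training pair, run the augmentation algorithm several times on $x_n$ and score every generated input against the unchanged label $y_n$. The sketch below assumes a hypothetical `augment(x_n, rng)` callable playing the role of $A$. If `augment` returns $x_n$ unchanged, the smoothed distribution collapses back to the empirical one and the estimate reduces to the ordinary empirical risk.

```python
import random
from typing import Any, Callable, Sequence, Tuple


def augmented_risk(
    f: Callable[[Any], float],
    loss: Callable[[float, Any], float],
    data: Sequence[Tuple[Any, Any]],
    augment: Callable[[Any, random.Random], Any],
    n_samples: int,
    seed: int = 0,
) -> float:
    """Monte Carlo estimate of the risk under the augmentation-smoothed distribution.

    For every training pair (x_n, y_n), draw n_samples inputs x = augment(x_n, rng)
    and score each one against the unchanged label y_n.
    """
    rng = random.Random(seed)
    total = 0.0
    for x_n, y_n in data:
        for _ in range(n_samples):
            x = augment(x_n, rng)
            total += loss(f(x), y_n)
    return total / (len(data) * n_samples)


if __name__ == "__main__":
    data = [(1.0, 2.0), (2.0, 5.0)]
    sq = lambda pred, y: (pred - y) ** 2
    # Identity "augmentation": recovers the plain empirical risk (0.5 here).
    print(augmented_risk(lambda x: 2 * x, sq, data, lambda x, rng: x, n_samples=3))
    # Hypothetical jitter: shift each input by -1 or +1 at random.
    jitter = lambda x, rng: x + rng.choice([-1.0, 1.0])
    print(augmented_risk(lambda x: 2 * x, sq, data, jitter, n_samples=1000))
```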

A very simple example would be a Gaussian kernel:

$$p(x \mid x_n, A) = \mathcal{N}(x \mid x_n, \sigma^2 I)$$

This has been called vicinal risk minimization, since we are minimizing the risk in the vicinity of each training point $x_n$.
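As a worked instance of the Gaussian kernel, assume (for illustration only) a linear model $f(x) = w^\top x$ with squared loss. Writing $x = x_n + \epsilon$ with $\epsilon \sim \mathcal{N}(0, \sigma^2 I)$, we get $\mathbb{E}[(y_n - w^\top x)^2] = (y_n - w^\top x_n)^2 + \sigma^2 \lVert w \rVert^2$, because the cross term has zero mean and $w^\top \epsilon$ has variance $\sigma^2 \lVert w \rVert^2$. So in this special case, vicinal risk minimization is ERM plus an $\ell_2$ penalty on $w$. The sketch below compares a Monte Carlo estimate with that closed form; the function names are hypothetical.

```python
import random
from typing import List, Sequence, Tuple

Data = Sequence[Tuple[Sequence[float], float]]


def dot(a: Sequence[float], b: Sequence[float]) -> float:
    return sum(ai * bi for ai, bi in zip(a, b))


def gaussian_vicinal_risk_mc(w: Sequence[float], data: Data, sigma: float,
                             n_samples: int, seed: int = 0) -> float:
    """Monte Carlo squared-loss risk of f(x) = w.x with x ~ N(x_n, sigma^2 I)."""
    rng = random.Random(seed)
    total = 0.0
    for x_n, y_n in data:
        for _ in range(n_samples):
            x: List[float] = [xi + rng.gauss(0.0, sigma) for xi in x_n]
            total += (y_n - dot(w, x)) ** 2
    return total / (len(data) * n_samples)


def gaussian_vicinal_risk_exact(w: Sequence[float], data: Data, sigma: float) -> float:
    """Closed form for this model and loss: empirical risk + sigma^2 * ||w||^2."""
    erm = sum((y_n - dot(w, x_n)) ** 2 for x_n, y_n in data) / len(data)
    return erm + sigma ** 2 * dot(w, w)


if __name__ == "__main__":
    w = [1.0, -2.0]
    data = [([1.0, 0.5], 1.0), ([0.0, 1.0], -1.0)]
    sigma = 0.5
    print(gaussian_vicinal_risk_exact(w, data, sigma))                # 2.25
    print(gaussian_vicinal_risk_mc(w, data, sigma, n_samples=20000))  # close to 2.25
```

With many samples the two numbers agree up to Monte Carlo error. For nonlinear models or other losses there is generally no such closed form, and sampling augmented inputs during training is the practical route.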