20.1 Principal component analysis (PCA)

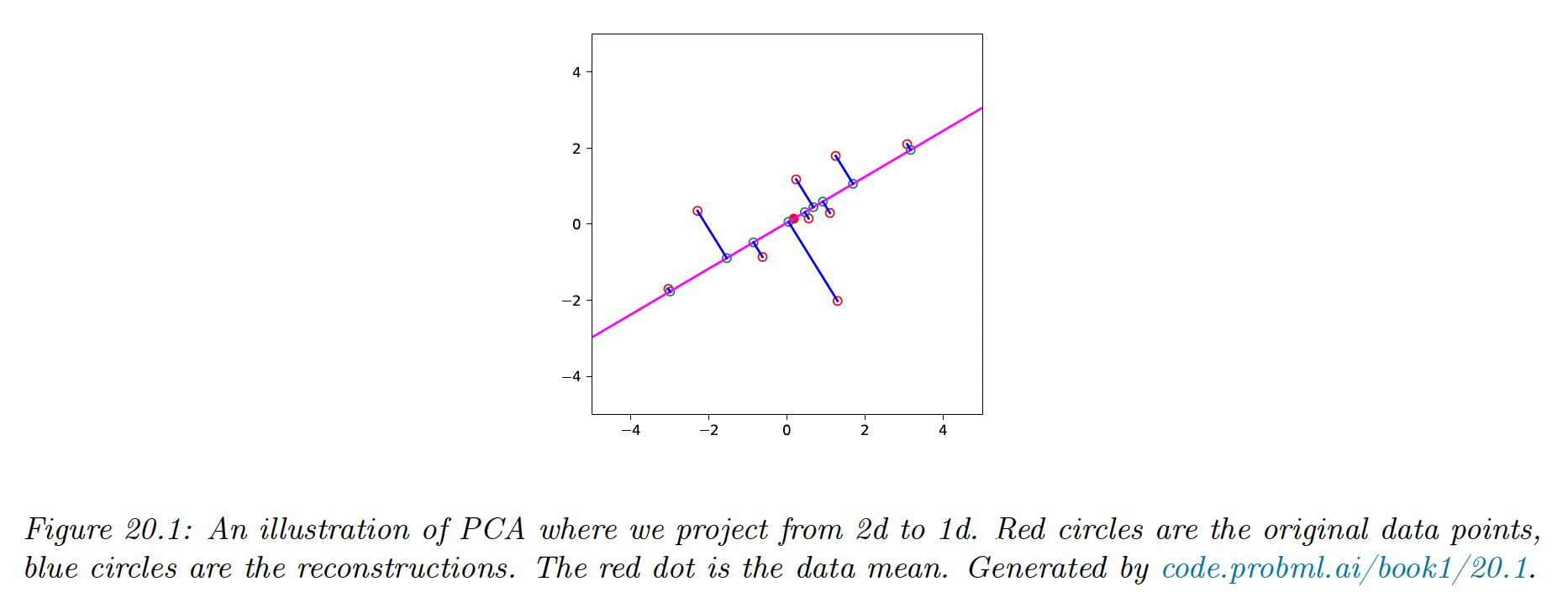

The simplest and most widely used form of dimensionality reduction is PCA. The basic idea is to find a linear and orthogonal projection of the high dimensional data to a low dimensional subspace , such that the low dimensional representation is a “good approximation” to the original data.

More specifically, if we project or encode to get and then unproject or decode to get , then we want and to be close in the distance.

We define the following reconstruction error or distortion:

where the encoder and decoder stages are both linear maps.

We can minimize this objective by setting , where contains the eigenvectors with the largest eigenvalues of the empirical covariance matrix:

where is the centered version of the design matrix.

This is equivalent to maximizing the likelihood of a latent linear Gaussian model known as probabilistic PCA.

20.1.1 Examples

We project below 2d data to a 1d line. This direction captures the most variation in the data.

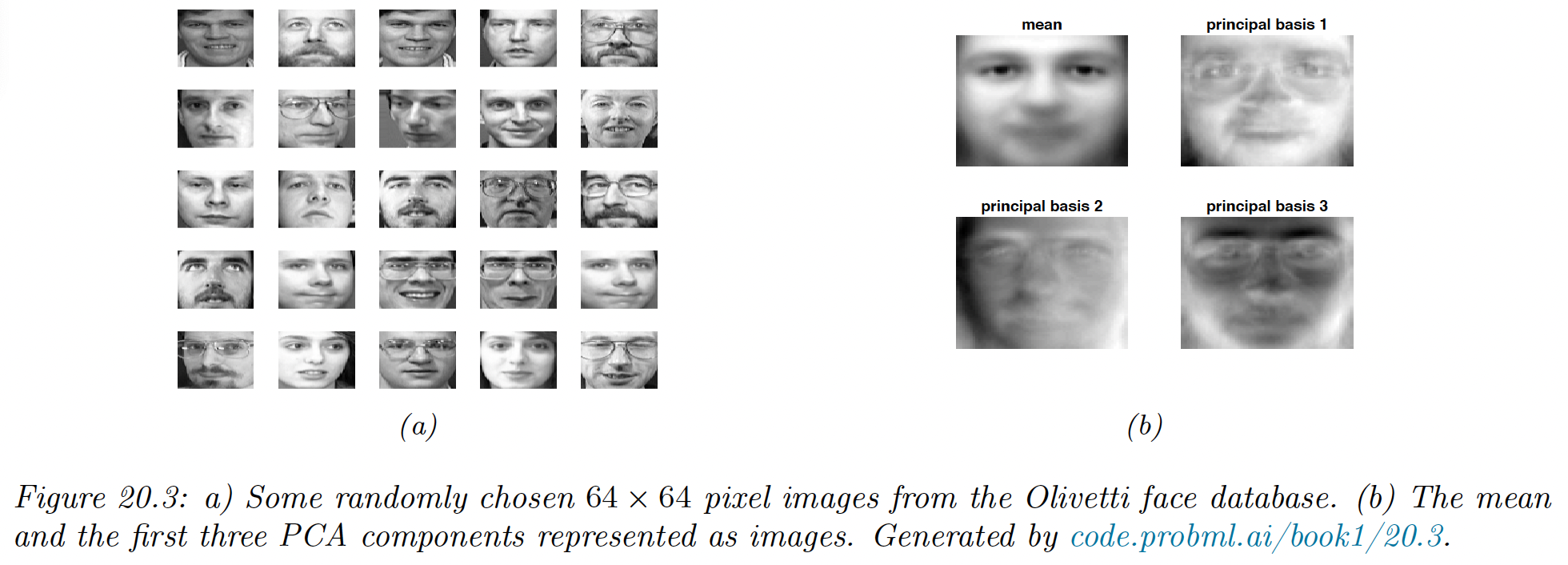

We show PCA applied to the Olivetti face image dataset, which is a set of 64 x 64 grayscale images.

We project these to a 3d subspace and display the basic vectors (the columns of the projection matrix ) known as eigenfaces. We see that the main mode of variation of the data are related to overall lighting and then differences around the eyebrows

If we use enough dimensions (but less than the original 4096) we can use the representation as input to a nearest-neighbor classifier to perform face recognition. This is faster and more reliable than working in the pixel space.

20.1.2 Derivation of the algorithm

Suppose we have an unlabeled dataset where . We can represent this as a data matrix.

We assume , which can be assured by centering the data.

We would like to approximate each by a latent vector . The collection of these latent variable are called the latent factors.

We assume each can be “explained” in terms of a weighed combination of basis functions where each and the weigths are , i.e we assume:

We can measure the reconstruction error produced by this approximation as:

We want to minimize this subject to the constraint that is an orthogonal matrix.

We show below that the optimal solution is obtained by setting where contains the eigenvectors with largest eigenvalues of the empirical covariance matrix.

20.1.2.1 Base case

We start by estimating the best 1d solution . We will find the remaining basis vectors later.

Let the latent coefficient for each data points associated with the first basis vector be

The reconstruction error is given by:

where by the orthonormality assumption.

Taking derivative wrt gives:

So the optimal embedding is obtained by orthogonally projecting the data onto .

Plugging it back to loss gives us:

Where is the empirical covariance matrix (since we assumed the data is centered) and a constant.

We can trivially optimize this by letting , so we impose the constraint and instead optimize:

where is a Lagrange multiplier.

Taking derivatives and equating to zero we have:

Hence, the optimal direction onto which should project the data is an eigenvector of the covariance matrix.

And since we have:

We can minimize the loss in (13) by picking the largest eigenvector which corresponds to the largest eigenvalue.

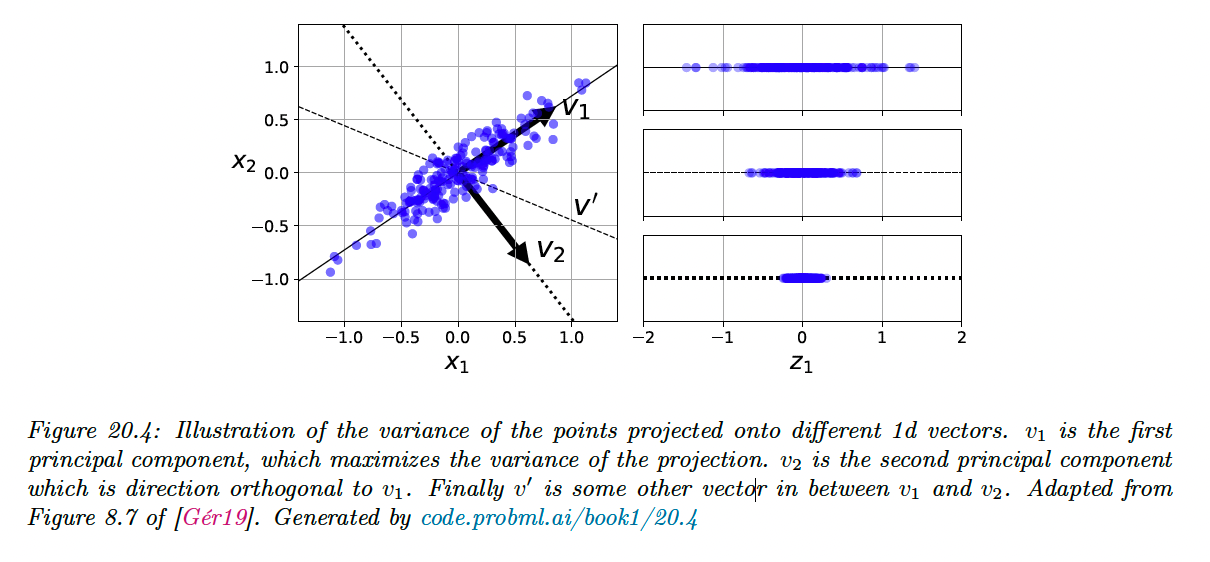

20.1.2.2 Optimal weight vector maximizes the variance of the projected data

Before continuing, we observe that, since the data has been centered:

Hence the variance of the projected data is given by:

From this we see that minimizing the reconstruction error is equivalent to maximizing the variance of the projected data.

This is why it is often said that PCA finds the direction of maximal variance.

20.1.2.3 Induction step

Now let us find another direction to further minimize the reconstruction error, subject to and :

Optimizing wrt and gives the same solution as before.

We can show that yields .

Substituting this solution yields:

Dropping the constant term, plugging the optimal and adding the constraint yields:

The solution is given by the eigenvector with the second largest eigenvalue:

The proof continue that way to show that .

20.1.3 Computational issues

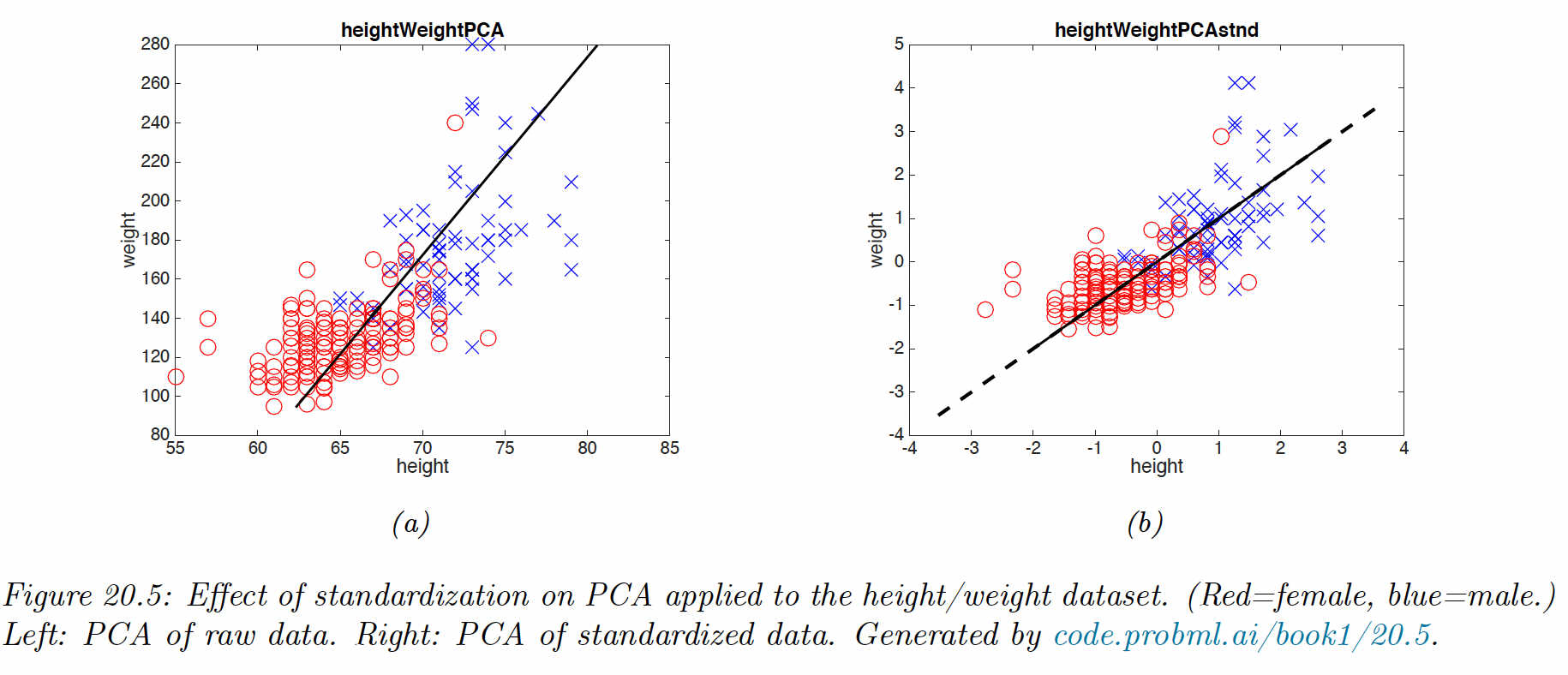

20.1.3.1 Covariance matrix vs Correlation matrix

We have been working with the covariance matrix, but it’s better to work with the correlation matrix because PCA might be “mislead” by the directions in which the variance is higher because of the measurement scale.

20.1.3.2 Dealing with high-dimensional data

We have introduced PCA as finding the eigenvectors of the covariance matrix .

When , it is faster to work with the Gram matrix instead. We show how to use it.

Let be the eigenvectors of with corresponding eigenvalues . By definition, we have:

so by left-multiplying by we get:

from which we see that the eigenvectors of are , with the same eigenvalues .

However, these eigenvectors are not normalized, since:

The normalized eigenvector are given by:

This provides an alternative way to compute the PCA basis, it also allows us to use to kernel trick.

20.1.3.3 Computing PCA using SVD

We now show the equivalence between the PCA computed using eigenvectors and the truncated SVD.

Let be the top engendecomposition of the covariance matrix (assuming is centered).

We know that the optimal estimate of the projection is .

Now let be the -truncated SVD approximation of the matrix .

We know that the right singular vectors of are the eigenvectors of , so .

In addition, the singular values of are related to the eigenvalues of with:

Now suppose we are interested in the principal components, we have:

Finally, if we want to reconstruct the data:

This is precisely the same as truncated SVD approximation.

Thus, we see that we can perform PCA either using a eigendecomposition of or a SVD decomposition of . The latter is often preferable for computational reason.

For very high dimensional problem, we can use a randomized SVD algorithm.

20.1.4 Choosing the number of latent dimensions

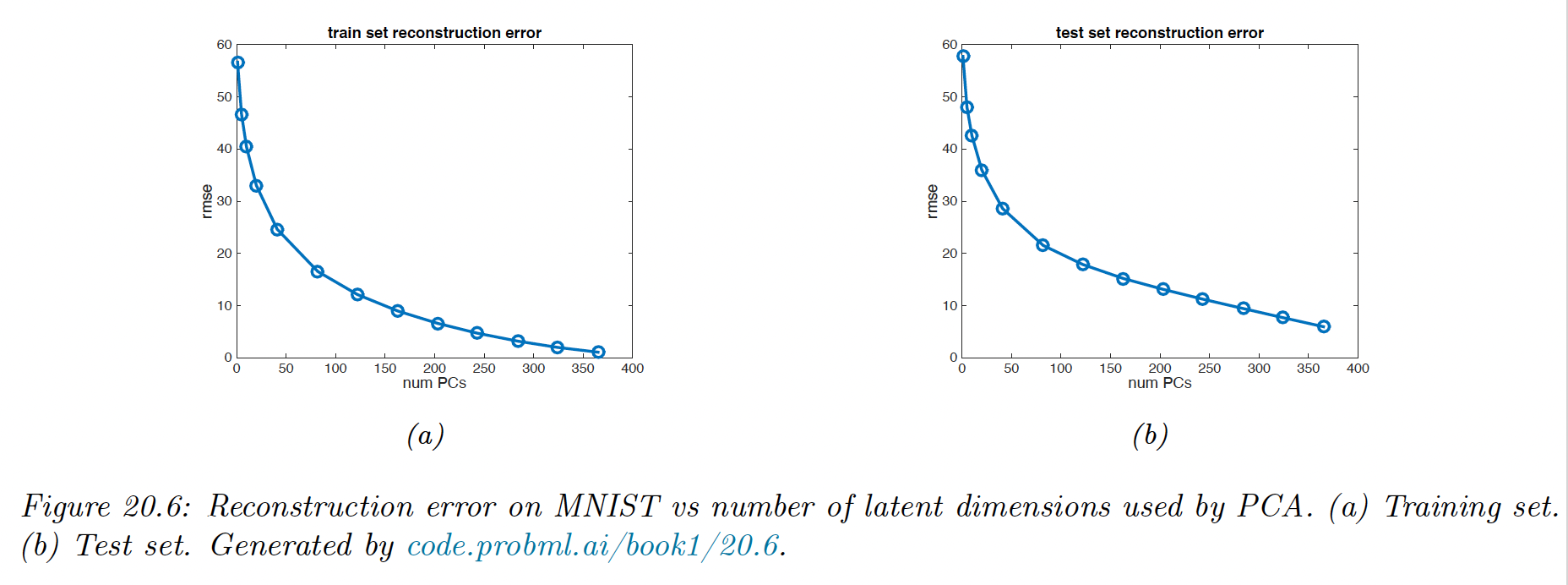

20.1.4.1 Reconstruction error

Let us define the reconstruction error on some dataset when using dimensions:

where the reconstruction is given by and .

We see that it drops quite quickly after, indicating that we capture most of the empirical correlation with a small number of factors.

Of course, if we choose , we get zero reconstruction error on the training set. To avoid overfitting, we compute the reconstruction error on the test set.

Here we see that the error continues to decrease even when the model gets more complex. Thus, we don’t see the typical U-shaped curve that we see in supervised learning.

The problem is that PCA is not a proper generative model of the data: if you give it more latent dimensions, it will approximate the test data more accurately. A similar problem arises if we plot reconstruction error on the test set with K-means clustering.

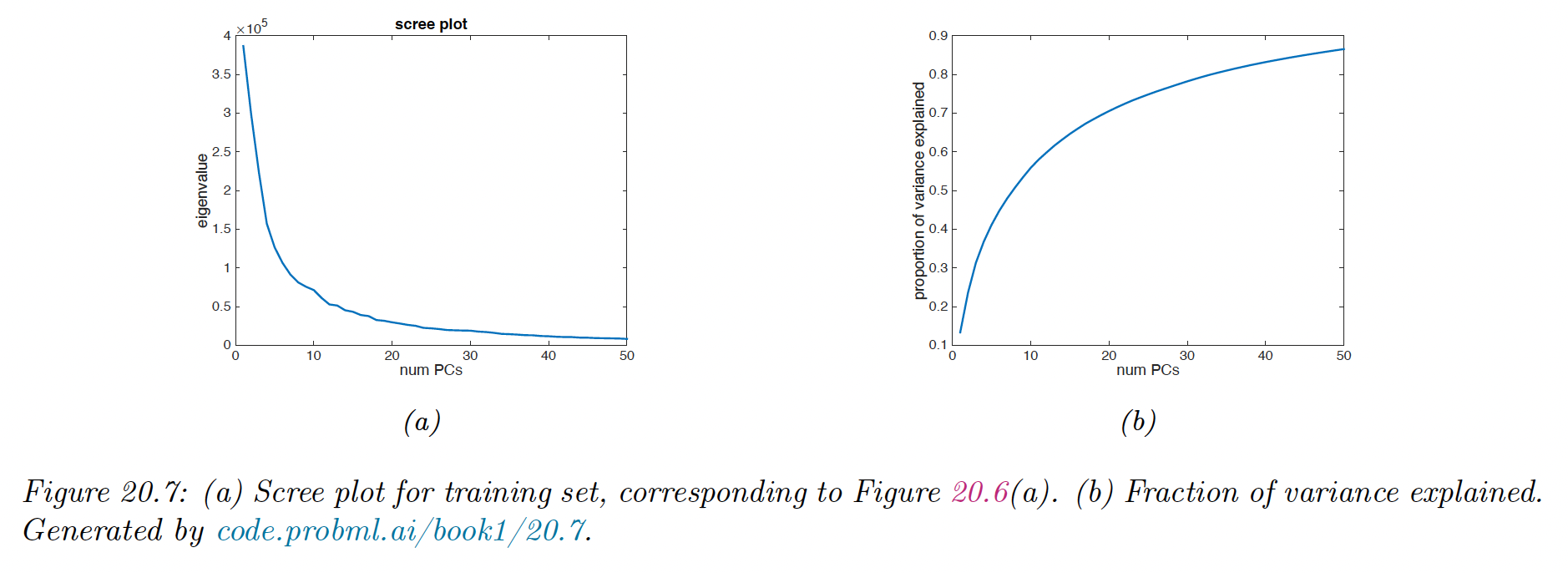

20.1.4.2 Scree plot

A common alternative to plotting reconstruction error vs is to plot the eigenvalues in decreasing order of magnitude.

We can show that:

Thus as the number of dimensions increases, the eigenvalues get smaller and so do the reconstruction error.

An related quantity is the fraction of variance explained:

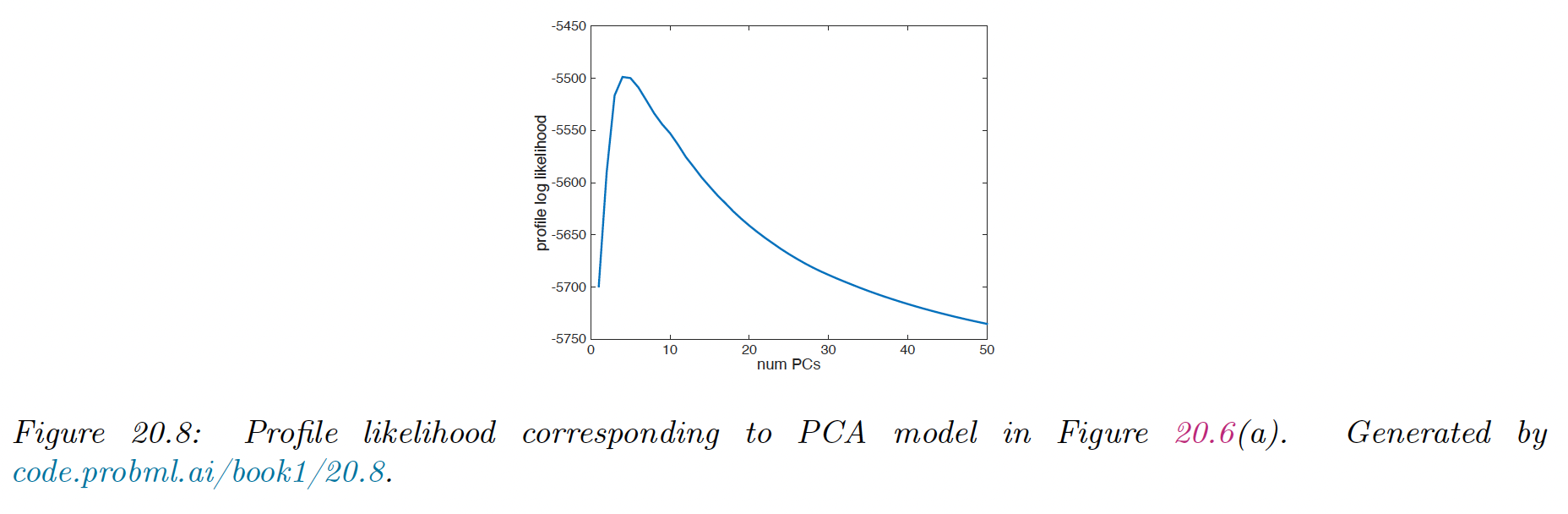

20.1.3.4 Profile likelihood

Although there is no U-shape in the reconstruction error plot, there is sometimes an “elbow” where the error suddenly changes from relatively large to relatively small.

The idea is that, for where is the “true” latent dimensionality, the rate of decrease of errors will be high, whereas for the gains will be smaller since the model is already complex enough to capture the true distribution.

One way to automate the detection of the change of gradient in the curve is to profile likelihood.

Let be some measure of error incurred by the model of size , such that . In PCA these are the eigenvalues but the method can be applied to reconstruction error from K-means clustering.

We partition these values into two group depending on whether or , where is some threshold.

To measure the quality of , we use a changepoint model such that:

It’s important that be the same to avoid overfitting in the case on regime has more data than the other. Within each of the two regimes, we assume are iid, which is obviously incorrect but adequate for our present purposes.

We can fit this model for each by partitioning the data and computing the MLEs, using a pool estimate of the variance:

We can then evaluate the profile log likelihood:

We that the peak is well determined.