11.5 Regression Splines

As we have seen, we can use polynomial basis functions to create nonlinear mappings, even though the model remains linear in parameters.

One issue is that polynomials are a global approximation to the function. We can achieve more flexibility by using a series of local approximations.

We restrict ourselves to 1d inputs, where the notion of locality is easier to understand. We can approximate the function using:

$$f(x; \theta) = \sum_{i=1}^{m} w_i B_i(x)$$

where $B_i$ is the $i$th basis function and $w_i$ is its weight.

A common way to define such basis functions is to use B-splines ("B" stands for "basis", and "spline" refers to a piece of flexible material used by artists to draw curves).

11.5.1 B-spline basis functions

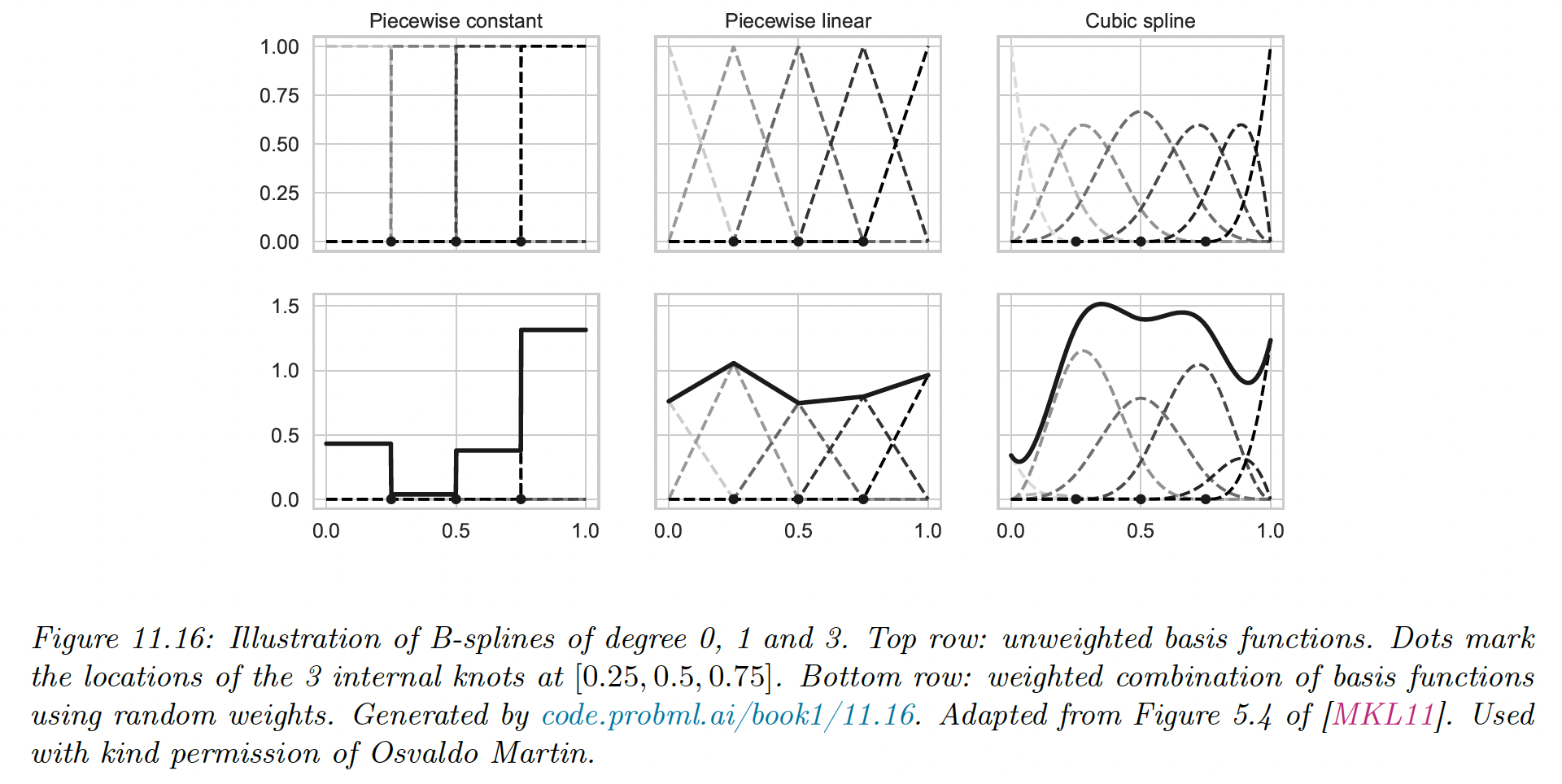

A spline is a piecewise polynomial of degree $D$, where the locations of the pieces are defined by a set of knots $t_1 < \dots < t_K$.

The polynomial is defined on each of the intervals $(-\infty, t_1), [t_1, t_2], \dots, [t_K, \infty)$. The function is continuous and has continuous derivatives of orders $1, \dots, D - 1$ at its knot points.

It is common to use cubic splines, where $D = 3$, ensuring the function has continuous first and second-order derivatives at each knot.

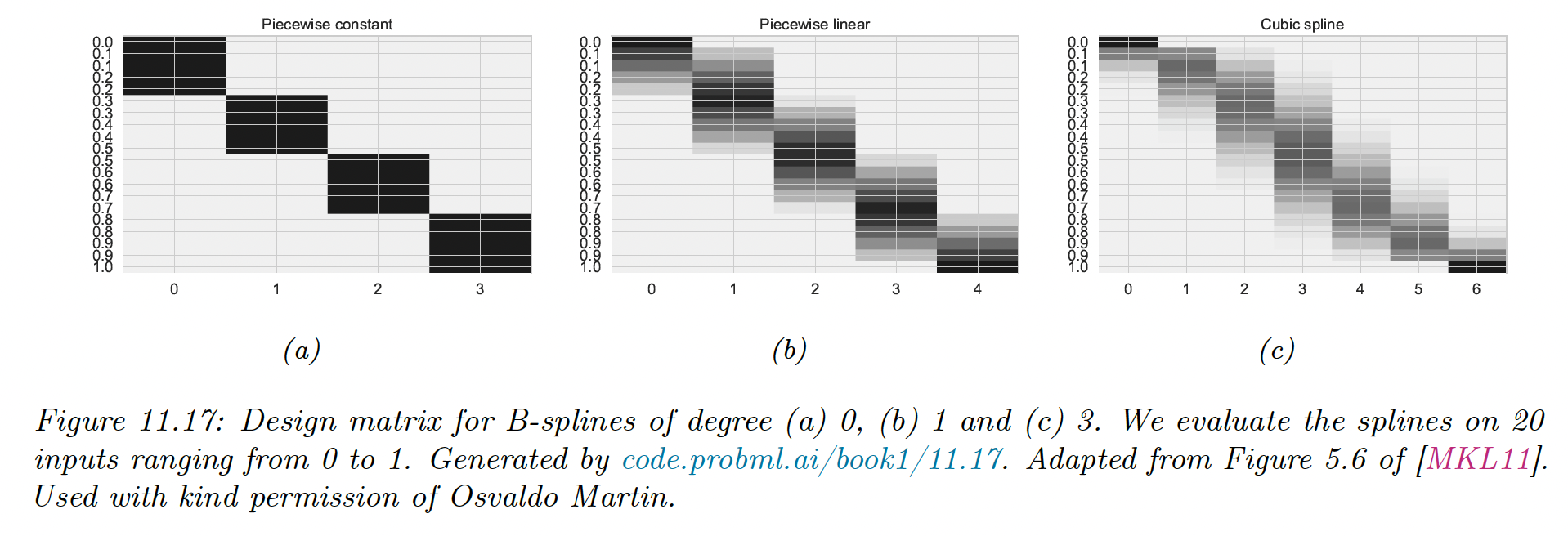

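We don't focus on the inner workings of splines. It suffices to say that we can use scikit-learn to convert the $N \times 1$ data matrix $X$ into an $N \times m$ design matrix $B$, where the number of basis functions $m$ is determined by the number of knots $K$ and the degree $D$.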

We see that for any given input, only $D + 1$ basis functions are active (non-zero), where $D$ is the spline degree: one for the piecewise constant function ($D = 0$), two for linear ($D = 1$), and four for cubic ($D = 3$).

11.5.2 Fitting a linear model using a spline basis

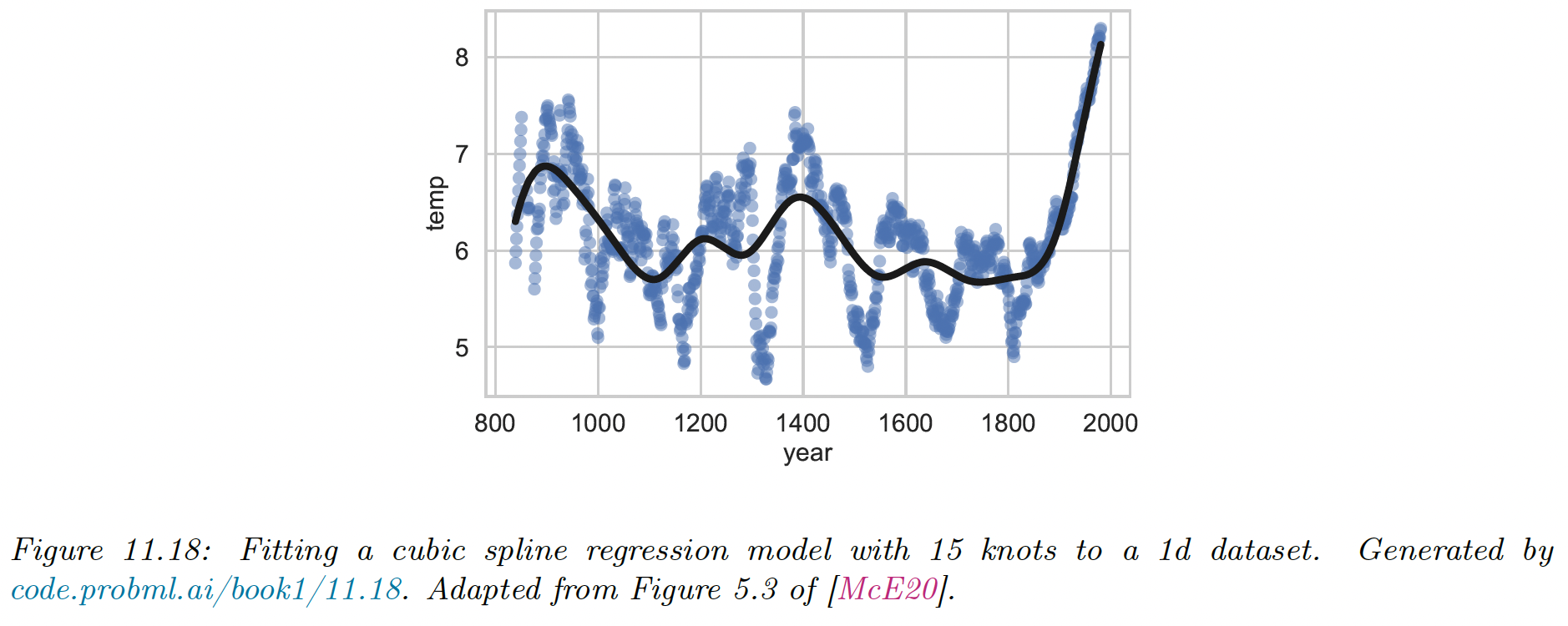

After computing the design matrix $B$, we can use it to fit a linear model by least squares (or by ridge regression, since some regularization often helps).

We use a temperature dataset with a semi-periodic structure and fit it using cubic splines. We chose 15 knots according to the quantiles of the data.

We see that the fit is reasonable. Adding more knots would fit the training data more closely, at the risk of overfitting. We can choose the number of knots using model selection, such as grid search with cross-validation.

11.5.3 Smoothing splines

Smoothing splines are related to regression splines, but use $N$ knots, where $N$ is the number of datapoints. They are non-parametric methods, since the number of parameters is not fixed a priori but grows with the size of the data.

To avoid overfitting, smoothing splines rely on regularization.
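One standard way to write this regularization, given here as an illustration rather than something stated in the notes, is a penalty on the curvature of the fitted function, with a strength $\lambda \geq 0$ (a symbol introduced here):

$$\hat{f} = \arg\min_{f} \sum_{n=1}^{N} \left(y_n - f(x_n)\right)^2 + \lambda \int f''(x)^2 \, dx$$

With $\lambda = 0$ the spline is free to interpolate the data, and as $\lambda \to \infty$ the fit approaches the least squares straight line.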

11.5.4 Generalized additive models (GAM)

A generalized additive model (GAM) extends the notion of spline to $D$-dimensional inputs, without interactions between features. It assumes a function of the form:

$$f(x; \theta) = \alpha + \sum_{d=1}^{D} f_d(x_d)$$

where each $f_d$ is a regression or smoothing spline.

This model can be fitted using backfitting, which iteratively fits each $f_d$ to the partial residuals generated by the other terms.

We can extend GAM beyond the regression case, e.g. to classification, by using a link function as in generalized linear models.