23.5 Deep Graph Embeddings

We now focus on graph in unsupervised and semi-supervised settings.

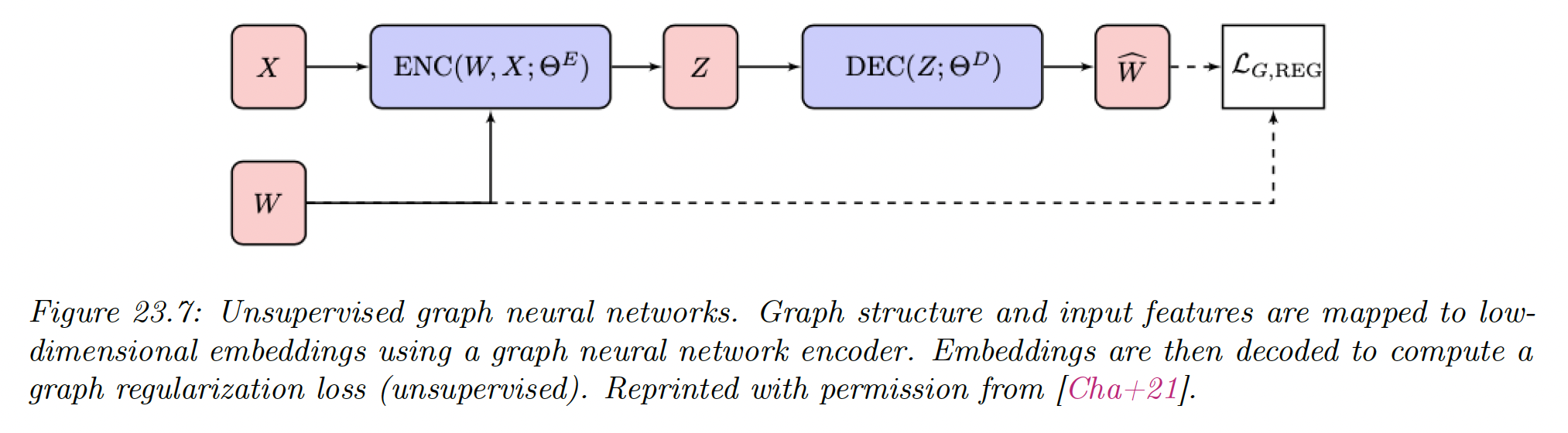

23.5.1 Unsupervised graph embeddings

23.5.1.1 Structural deep network embedding (SDNE)

SDNE method uses auto-encoders preserving first and second order node proximity.

It takes a row of the adjacency matrix as input and produces node embeddings . Note that this ignores node features .

The SDNE decoder returns , a reconstruction trained to recover the original graph adjacency matrix.

SDNE preserves second order proximity by minimizing the loss:

The first term is similar to matrix factorization techniques, except is not computed using outer product. The second term is used by distance based shallow embedding methods.

23.5.1.2 (Variational) graph auto-encoders (GAE)

GAE use graph convolutions to learn node embeddings .

The decoder is an outer product

The graph reconstruction term is the sigmoid cross entropy between the true adjacency and the predicted edges similarity scores:

To avoid computing the regularization term over all possible nodes pairs, GAE uses negative sampling.

Whereas GAE is a deterministic model, the author also introduced variational graph auto-encoders (VGAE).

The embedding is modeled as a latent variable with a standard multivariate prior and a graph convolution is used as the amortized inference network .

The model is training by minimizing the corresponding negative ELBO:

23.5.1.3 Iterative generative modeling of graphs (Graphite)

The graphite model extends GAE and VGAE by introducing a more complex decoder, which iterate between pairwise decoding functions and graph convolutions:

where is the input of the encoder.

This process allows Graphite to learn more expressive decoders. Finally, similar to GAE, graphite can be deterministic or variational.

23.5.1.4 Methods based on contrastive loss

The deep graph infomax (DGI) method is a GAN-like method for creating graph-level embeddings.

Given one or more real (positive) graphs, each with its adjacency matrix and nodes features , this method creates fake (negative) adjacency matrices and their features .

DGI trains:

i) an encoder that processes both real and fake samples, giving:

ii) A (readout) graph pooling function

iii) A discriminator function which is trained to output

for nodes corresponding to given graph and fake graph .

The loss is:

where contains and the parameter of and .

In the first expectation, DGI samples from the real graphs. If only one graph is given, it could sample some subgraphs from it (connected components).

The second expectation sample fake graphs. In DGI, fake graphs use the real adjacency matrix but fakes features are row-wise random permutation of the real .

The encoder used in DGI is a GCN, though any GNN can be used.

The readout summarizes an entire (variable size) graph to a single (fixed-dimension) vector. DGI uses a row-wise mean, though other graph pooling might be used, e.g. ones aware of the adjacency.

The optimization of the loss maximize a lower-bound on the mutual information between the outputs of the encoder and the graph pooling function, i.e. between individual node representation and the graph representation.

Graphical Mutual Information (GMI) is a variant that maximizes the MI between the representation of a node and its neighbors, rather than the maximizing the MO of node information and the entire graph.

23.5.2 Semi-supervised graph embeddings

We now discuss semi-supervised losses for GNNs. We consider the simple special case in which we use a nonlinear encoder of the node features , but ignores the graph structure :

23.5.2.1 SemiEmb

Semi-supervised embeddings (SemiEmb) use an MLP for the encoder of . For the decoder, we can use a distance-based graph decoder:

where we can use the L1 or L2 norm.

SemiEmb regularizes intermediate layers in the network using the same regularizer as the label propagation loss:

23.5.2.2 Planetoid

Unsupervised skip-gram methods like DeepWalk and node2vec learn embeddings in a multi-step pipeline, where random walks are first generated from the graph and then used to learn embeddings.

These embeddings are likely not optimal for downstream classification tasks. The Planetoid method extends such random walk methods to leverage node label information during the embedding.

Planetoid first maps nodes to embeddings using a neural net (again ignoring graph structure):

The node embeddings capture structural information while the node embeddings capture feature information.

There are two variant of encoding for :

- A transductive version that directly learn as an embedding lookup

- An inductive model where is computed with parametric mappings that act on input features

The planetoid objective contains both a supervised loss and a graph regularization loss. This latter term measures the ability to predict context using nodes embeddings:

with and , with if is a positive pair and if is a negative pair.

The distribution under the expectation is defined through a sampling process.

The supervised loss in Planetoid is the NLL of predicting the correct labels:

where is a node’s index, and are computed using a neural net followed by a softmax activation, mapping to predicted labels.