21.5 Spectral clustering

Spectral clustering is an approach based on eigenvalue analysis of a pairwise similarity matrix. It uses the eigenvectors to derive feature vectors for each data point, which are then clustered using feature-based clustering methods like K-means.

21.5.1 Normalized cuts

We start by creating an undirected weighted graph , where each data point is a node, and the strength of the edge - is a measure of similarity.

We only connect a node to its nearest neighbors to ensure the graph is sparse, which speeds computation.

Our goal is to find clusters of similar point, i.e. a graph partition into disjoints sets of nodes so as to minimize the normalized cut:

where:

and where is the complement of , , is the total weight of set (the sum of the degree of its edges).

This splits the graph into clusters such that the nodes of each cluster are similar to each other but different from the rest.

We could formulate the Ncut problem by using , where iff the point belongs to the cluster .

Unfortunately, this is NP-hard. Below, we discuss a continuous relaxation of the problem based on eigenvectors that is easier to solve.

21.5.2 Eigenvectors of the graph Laplacian encode the clustering

The graph Laplacian is defined by:

where is the symmetric weight matrix of the graph and is the diagonal matrix containing the weight degree of each node, .

In practice, it is important to account for the normalized graph Laplacian, to account for the fact that some nodes are more highly connected than others. One way to do it is to create the symmetry matrix:

The algorithm is the following:

- Find the smallest eigenvectors of

- Stack them into a matrix

- Normalize each row to unit norm to make the matrix

- Cluster the matrix using K-means, then infer the partition of the original point

21.5.3 Example

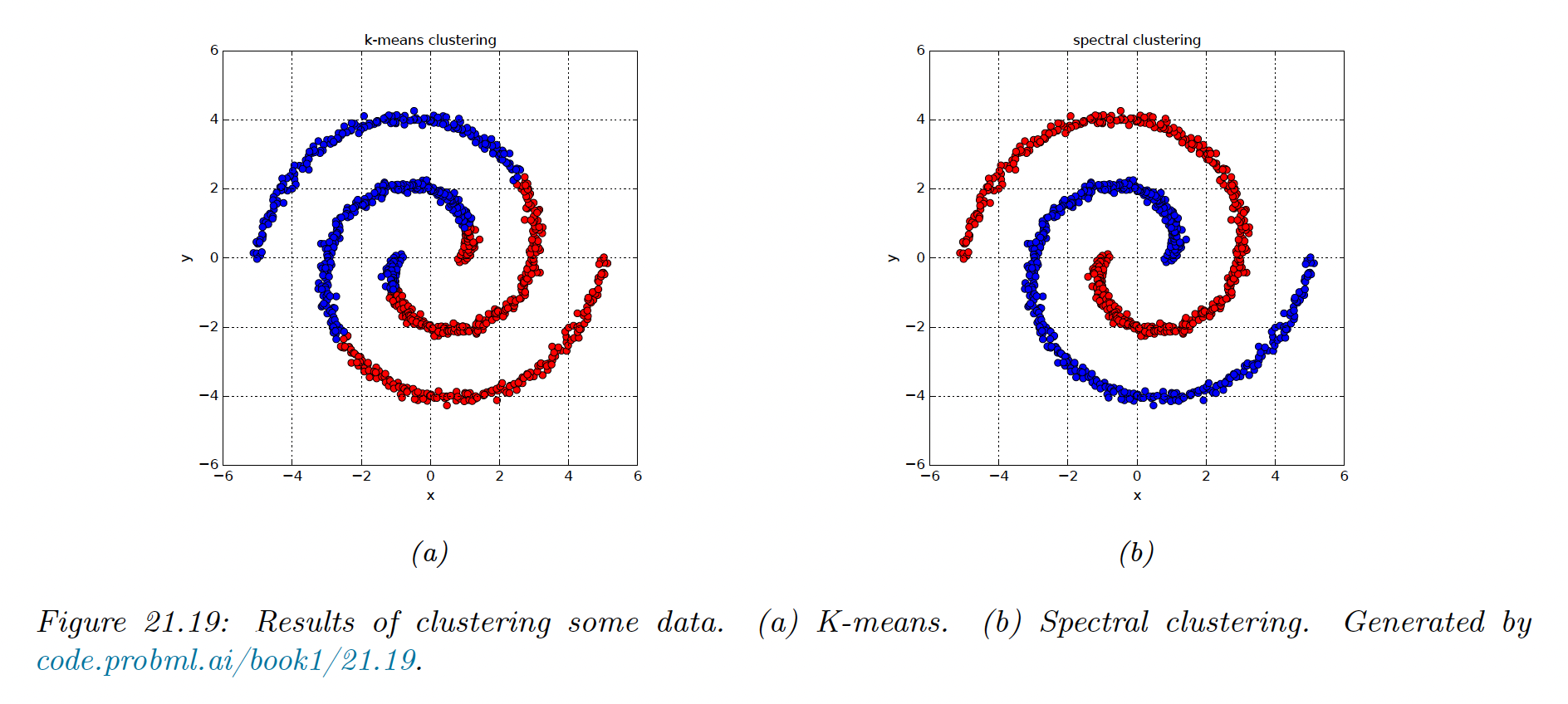

We see that for nested circles, K-means does a poor job, since it assumes each cluster corresponds to a spherical Gaussian.

Next, we compute a dense similarity matrix using a Gaussian kernel:

We then compute the first two eigenvectors of the normalized Laplacian . From this we infer the clustering using K-means with .

21.5.4 Connection with other methods

21.5.4.1 Spectral clustering and kPCA

Kernel PCA uses the largest eigenvectors of , which are equivalent to the smallest eigenvectors of .

In practice, spectral clustering tends to give better results than kPCA.