20.2 Factor analysis

PCA is a simple form of linear low-dimensional representation of the data.

Factor analysis is a generalization of PCA based on probabilistic model, so that we can treat it as building block for more complex models such as mixture of FA models or nonlinear FA models.

20.2.1 Generative model

Factor analysis is a linear-Gaussian latent variable generative model:

where is known as the factor loading matrix and is the covariance matrix.

FA can be thought as a low-rank version of a Gaussian distribution. To see this, note that the following induced marginal distribution is Gaussian:

Without loss of generality, we set and .

We get:

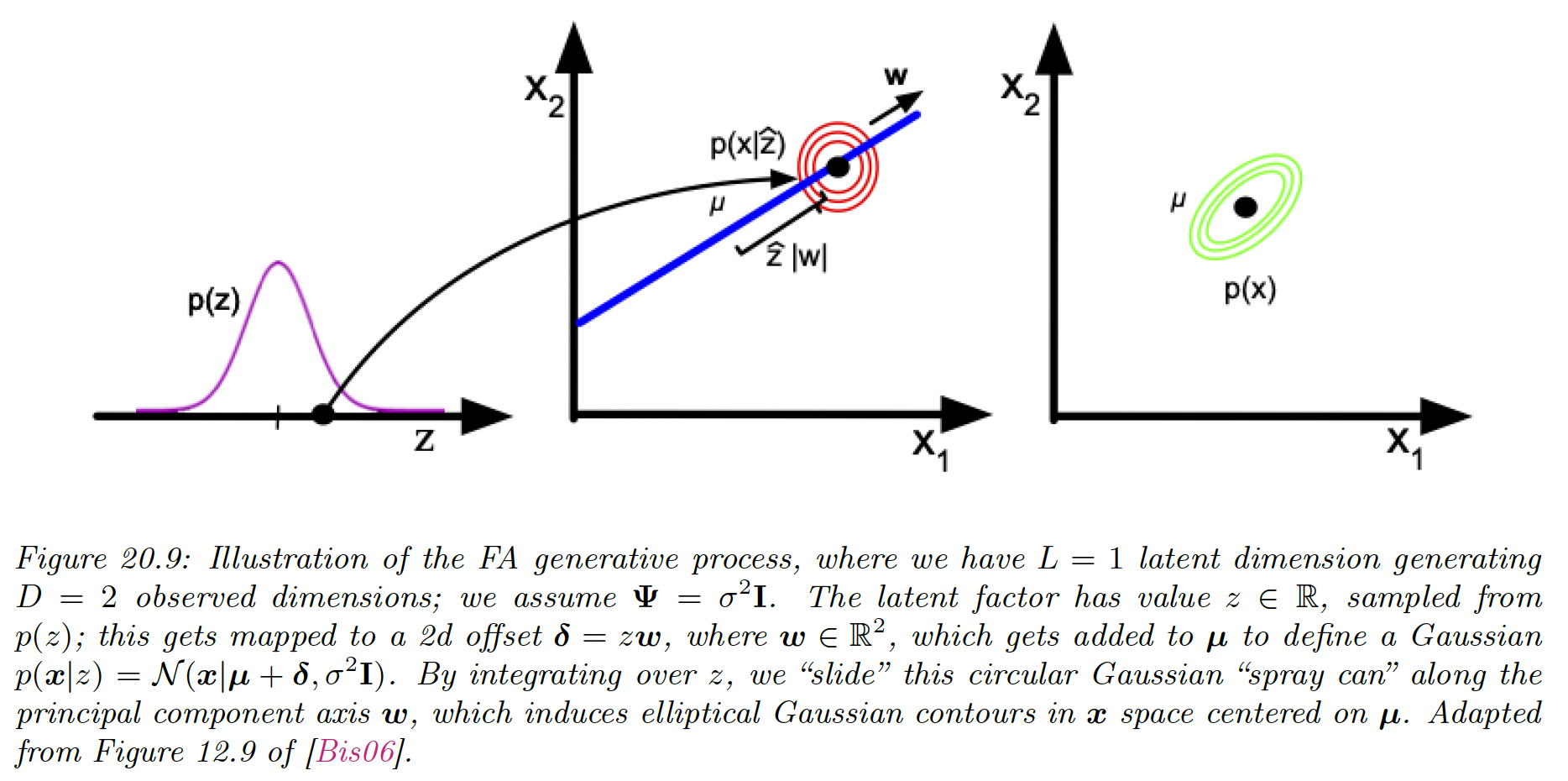

Suppose we use and and .

We can see it as taking an isotropic Gaussian “spray can” representing the likelihood , and sliding it along the 1d line defined by as we vary the 1d latent prior .

In general, FA approximates the low rank decomposition of the visible covariance matrix:

This only uses parameters, which allows a flexible compromise between a full covariance Gaussian with parameters, and a diagonal covariance with parameters.

From this equation, we see that we should restrict to be diagonal, otherwise we could set , ignoring latent factors while still being able to model any covariance.

The marginal variance of each visible variable is:

where the first term is the variance due to the common factors, and the uniqueness is the variance specific to that dimension.

We can estimate the parameters of a FA using EM. Once we have fit the model, we can compute probabilistic latent embeddings using .

Using Bayes rule for Gaussians, we have:

20.2.2 Probabilistic PCA

We consider a special case when of the factor analysis in which has orthonormal columns, \Psi=\sigma^2I$$\mu=\bold{0}. This is called probabilistic PCA or sensible PCA.

The marginal distribution on the visible variables has the form:

where:

The log likelihood is:

where we plugged , the MLE of , and used the trace trick on the empirical covariance matrix

It has been shown that the maximum of this objective must satisfy:

where are the eigenvectors associated to the largest eigenvalues of , and is the diagonal eigenvalues matrix.

In the noise free limit, where , we get:

which is proportional to the PCA solution.

The MLE for the observation variance is:

which is the average distortion associated with the discarded dimensions.

20.2.4 Unidentifiability of the parameters

The parameters of a FA model are unidentifiable. To see this, consider a model with weight , where is an arbitrary orthogonal rotation matrix, satisfying .

This has the same likelihood as model with weight , since:

Geometrically, multiplying by an orthogonal matrix is like rotating before generating ; but since is drawn from an anisotropic Gaussian distribution, it makes no difference on the likelihood.

Consequently, we can’t uniquely identify and the latent factors either.

To break this symmetry, several solutions have been proposed:

- Forcing to have orthonormal columns. This is the approach adopted by PCA.

- Forcing to be lower triangular.

- Sparsity promoting priors on the weight, by using regularization on .

- Non gaussian prior .

20.2.5 Nonlinear factor analysis

The FA model assumes the observed data can be modeled with a linear mapping from a low-dimensional set of Gaussian factors.

One way to relax such assumption is to let the mapping from to be a nonlinear model, such as a neural network. The model becomes:

This is called nonlinear factor analysis.

Unfortunately, we can’t compute the posterior or MLE exactly. Variational autoencoders is the most common way to approximate nonlinear FA model.

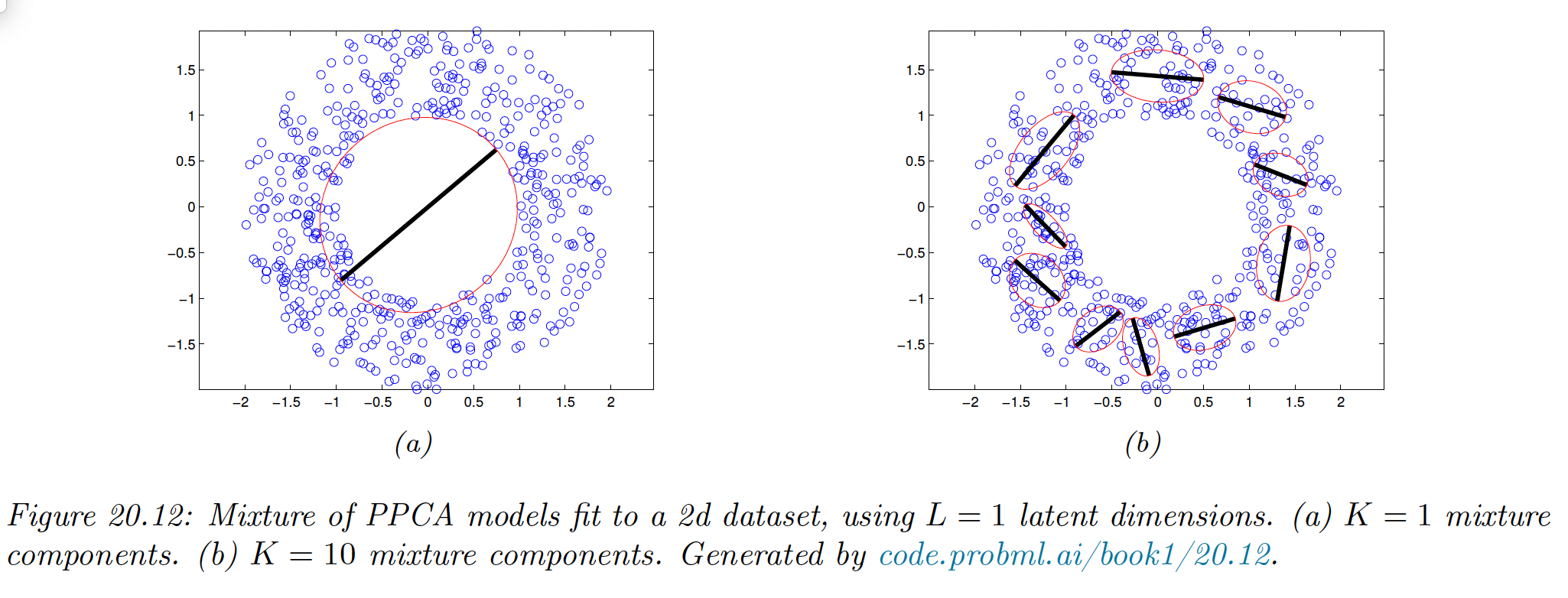

20.2.6 Mixture of factor analysers

One other way to relax the assumption made by FA is assuming the model is only locally linear, so the overall model becomes a weighted combination of FA models. This is called a mixture of FA (MFA).

The overall model for the data is a mixture of linear manifolds, which can be used to approximate the overall curved manifold.

Let be the latent indicator which specify which subspace we should use to generate the data.

If , we sample from a Gaussian prior and pass it through the matrix and add noise.

The distribution in the visible space is:

In the special case of we get a mixture of PPCA (although it is diffucilt to ensure orthogonality of the in this case).