17.1 Mercer Kernels

A Mercer kernel or positive definite kernel is a symmetric function

Given a set of data points, we define the Gram matrix as the following similarity matrix:

is a Mercer kernel iff the Gram matrix is positive definite for any set of distinct input

The most widely used kernel is the RBF kernel:

Here corresponds is the bandwidth parameter i.e. the distance over which we expect differences to matter.

The RBF measures similarity between two vectors in using scaled Euclidean distance.

17.1.1 Mercer’s theorem

Any positive definite matrix can be represented using an eigendecomposition of the form where is the diagonal matrix of eigenvalues and is a matrix containing the eigenvectors.

Now consider the element of :

where is the th column of .

If we define we can write:

The entries of the kernel matrix can be computed by performing an inner product of some feature vectors that are implicitly defined by the eigenvectors of the kernel matrix.

This idea can be generalized to apply to kernel function, not just kernel matrices. For example, consider the quadratic kernel . In 2d, we have:

We can write this:

where .

So, we embedded the 2d input into a 3d feature space .

The feature representation of the RBF kernel is infinite dimensional. However, by working with kernel functions, we can avoid having to deal with infinite dimensional vectors.

17.1.2 Popular Mercer kernels

17.1.2.1 Stationary kernels for real-valued vectors

For real-valued inputs, , it is common to use stationary kernels, of the form:

The value of the output only depends on the distance between the inputs. The RBF kernel is a stationary kernel. We give some examples below.

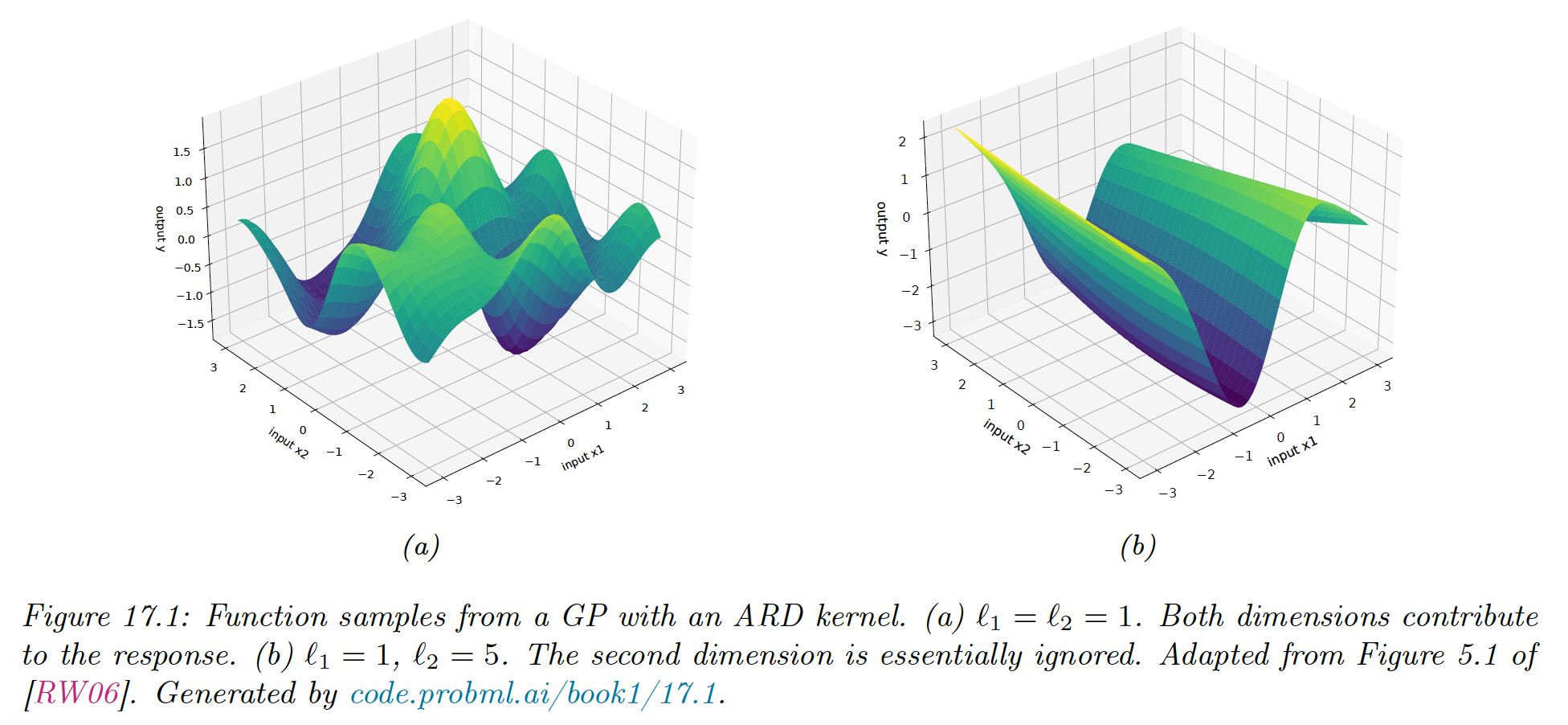

ARD Kernel

We can generalize the RBF kernel by replacing the Euclidean distance with the Mahalanobis distance:

where .

If is diagonal, this can be written:

where:

We can interpret as the total variance and as defining the characteristic length scale of dimension . If is an irrelevant input dimension, we can set , so the corresponding dimension will be ignore.

This is known as automatic relevancy determination (ARD), hence this kernel is called ARD kernel.

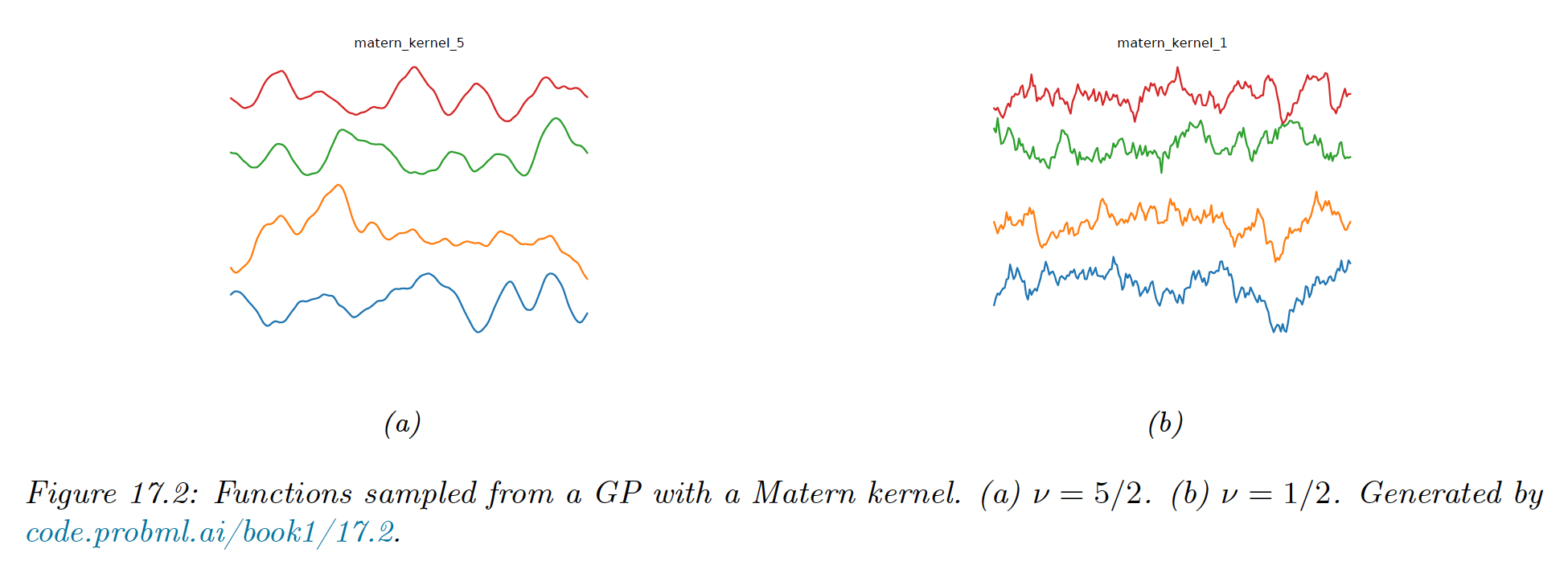

Matern kernels

The SE kernel yields functions that are infinitely differentiable, and therefore very smooth. For many applications, it is better to use the Matern kernel which yields rougher functions.

This allow to model small “wiggles” without having to make the overall length scale very small.

The Matern kernel has the following form:

where is a modified Bessel function and the length scale.

Functions sample from this GP are -times differentiable, where . As , this approaches the SE kernel.

For value , the function simplifies as follow:

This corresponds to the Ornstein-Uhlenbeck process which describe the velocity of a particle undergoing Brownian motion. The corresponding function is continuous but very jagged.

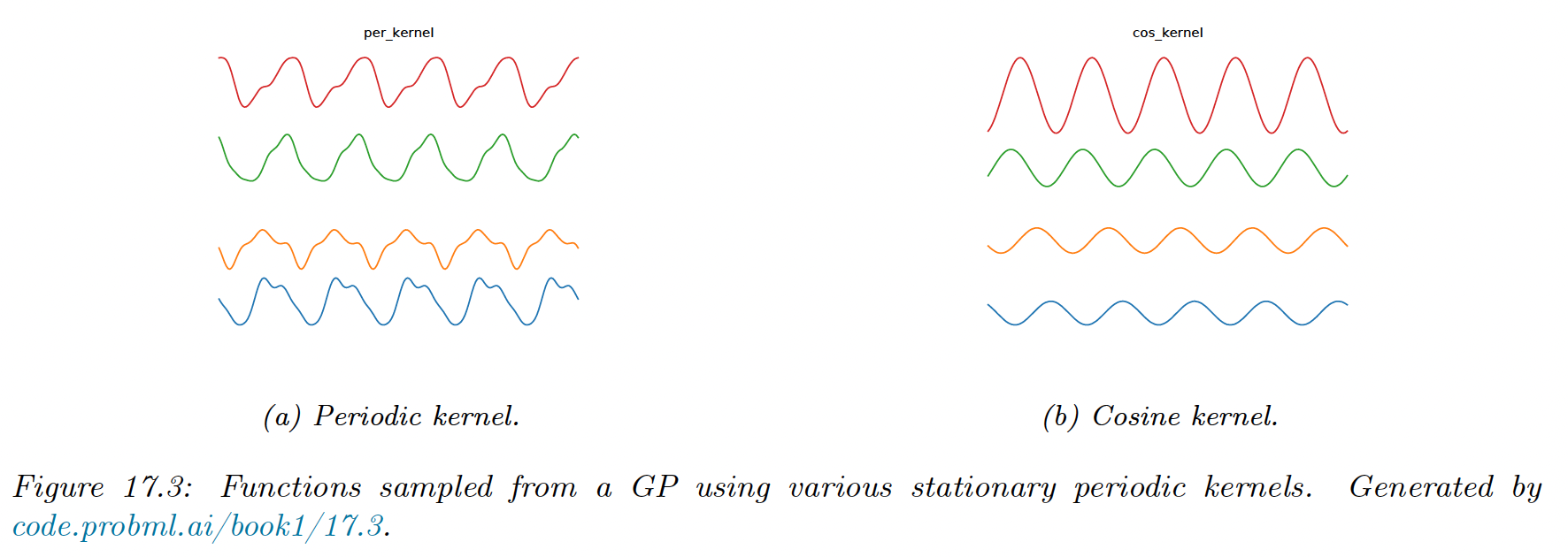

Periodic kernels

The periodic kernel capture repeating structure, and has the form:

where is the period.

This is related to the cosine kernel:

17.1.2.2 Making new kernels from old

Given a valid kernel , we can create new kernel using any of the following methods:

, for any function

, for any function polynomial with nonneg coef

, for any psd matrix

For example, suppose we start with the linear kernel .

We know this is a valid Mercer kernel since the Gram matrix is the (scaled) covariance matrix of the data.

From the above rules we see that the polynomial kernel is also a valid kernel, and contains all monomial of order

We can also infer that the Gaussian kernel is a valid kernel. We can see this by noting:

Hence:

We scale by a constant, then use the exponential, then symmetrically multiply by functions of and .

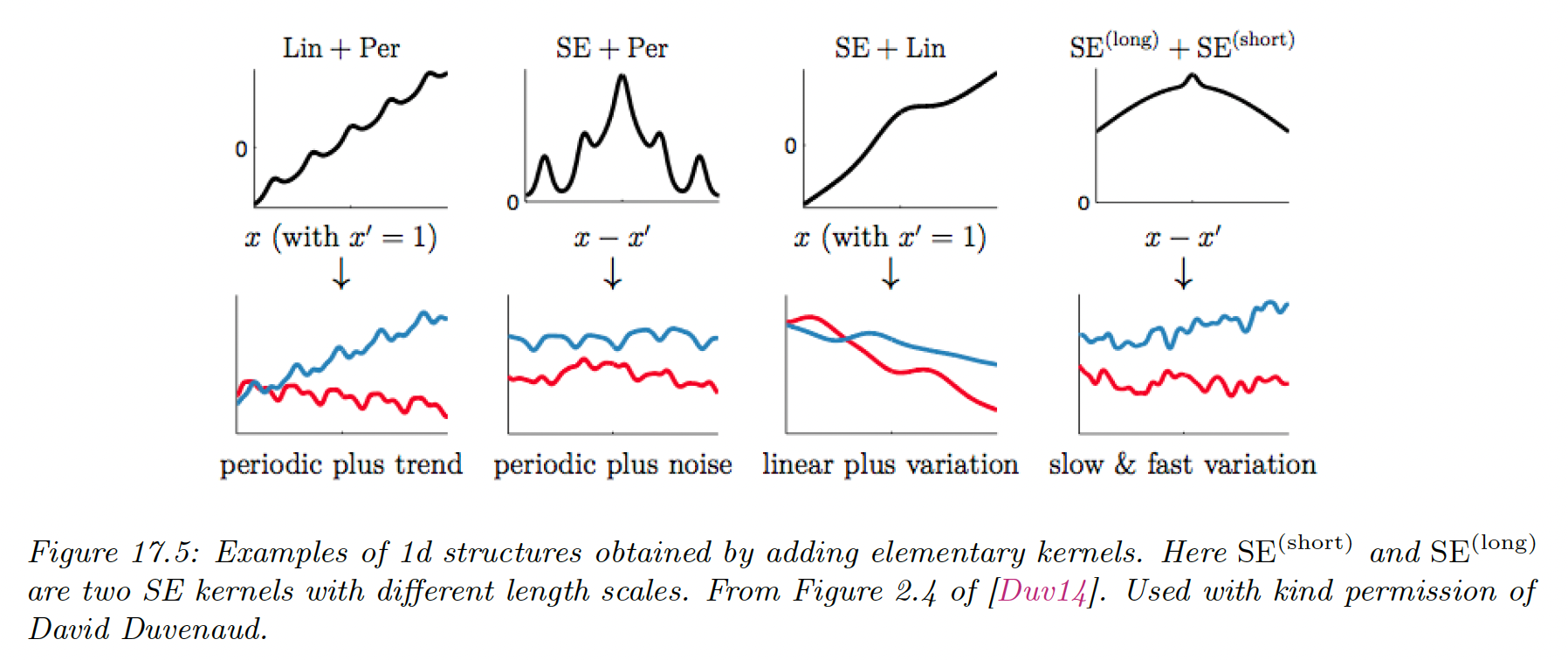

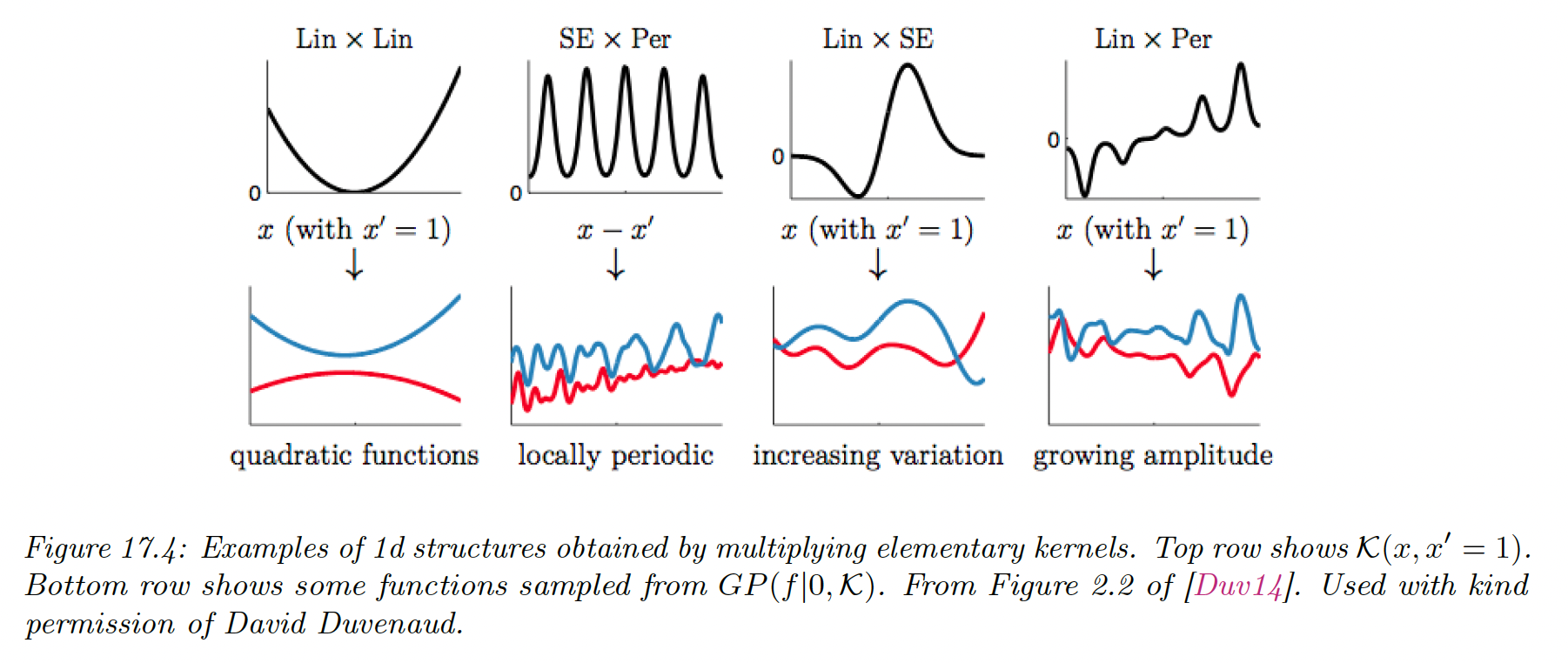

17.1.2.3 Combining kernels by addition and multiplication

We can also combine kernels using addition or multiplication:

Adding (resp. multiplying) positive-definite kernels together always result in another positive definite kernel. This is a way to get a disjunction (resp. conjunction) of the individual properties of each kernel.

17.1.2.4 Kernels for structured inputs

Kernels are particularly useful for structured inputs, since it’s often hard to “featurize” variable-size inputs.

We can define a string kernel by comparing strings in terms of the number of ngrams they have in common.

We can also define kernel on graph. For example random walk kernel perform random walk simultaneously on two graphs and count the number of paths in common.