17.2 Gaussian processes

Gaussian processes is a way to define distributions over functions of the form , where is any domain.

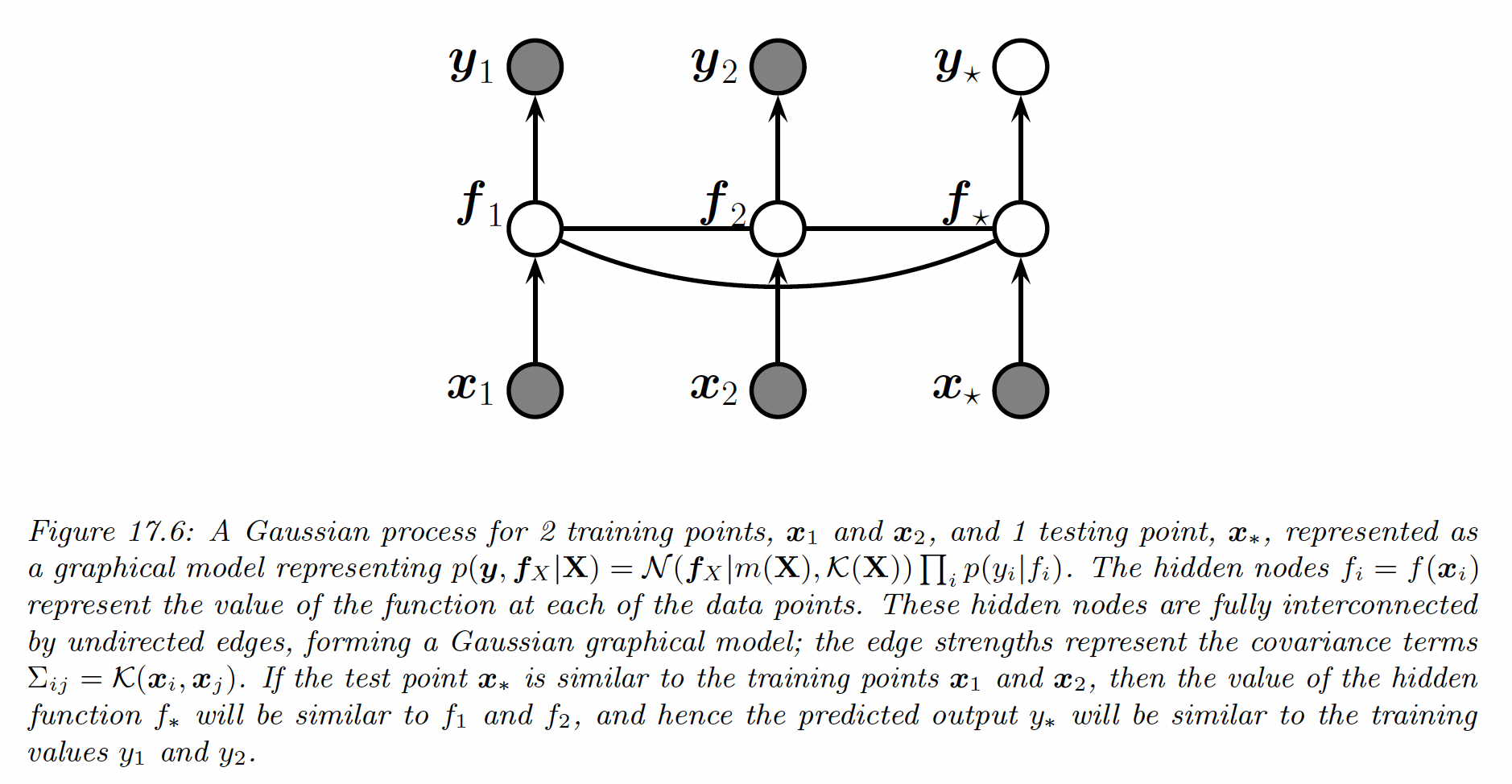

The key assumption is that the function values at a set of inputs, , is jointly Gaussian, with mean and covariance , where is a mean function and is a Mercer kernel.

This assumption holds when , containing training points and test point .

Thus, we can infer from knowledge of by manipulating the joint Gaussian distribution .

We can also extend this to work with noisy observations, such as in regression or classification problems.

17.2.1 Noise-free observations

Suppose we observe a training set , where is the noise-free function at .

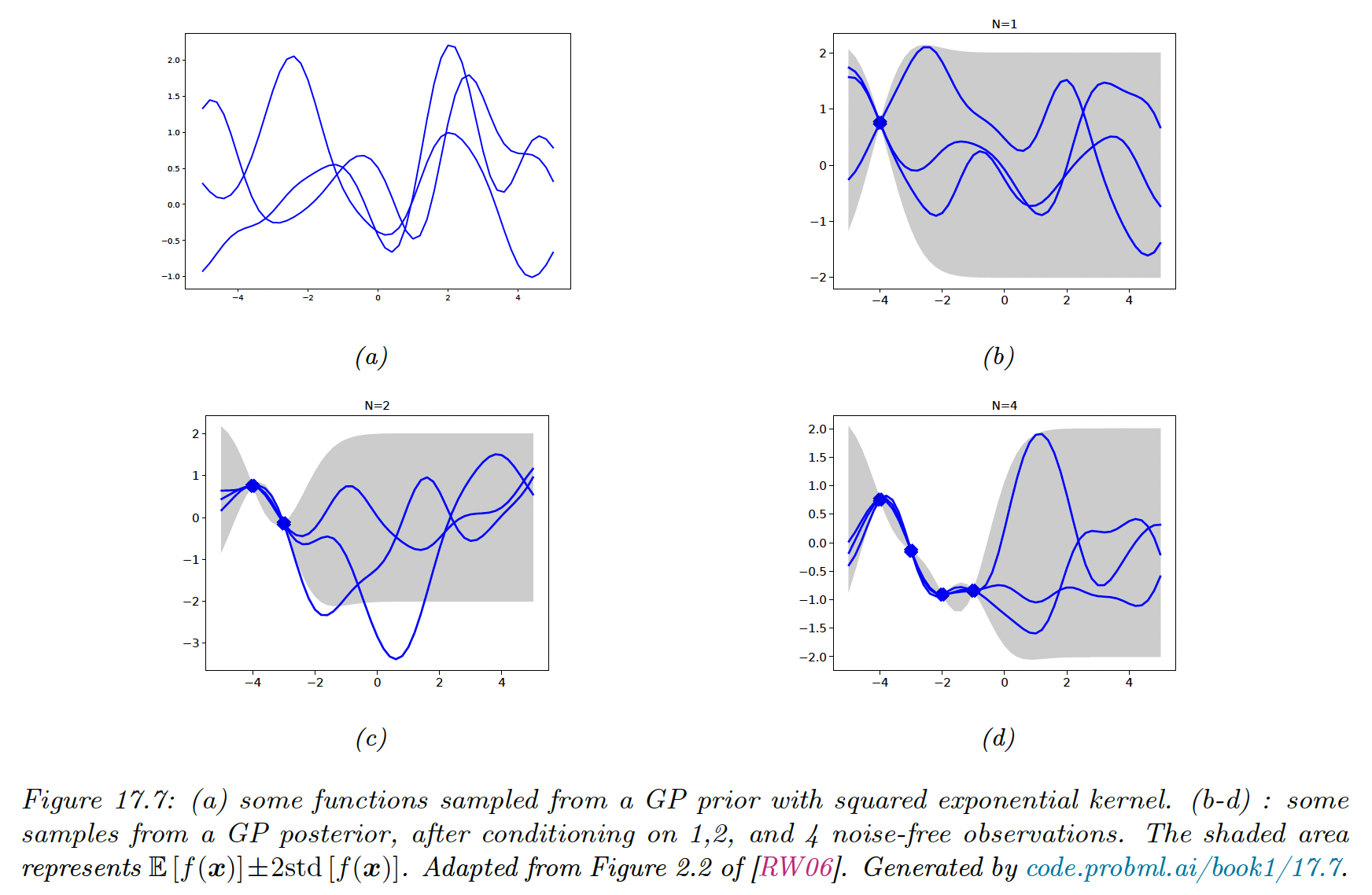

We want the GP to act as an interpolator of the training data, i.e. returning the answer with no uncertainty when it has already seen .

Let’s now consider a test set of size (not in ). We want to predict the function outputs .

By definition of the GP, the joint distribution has the form:

where , , and is .

By the standard rule for conditioning Gaussians, the posterior distribution is:

We see the uncertainty increase as we move further away from the training points.

17.2.2 Noisy observations

Let’s now consider the case where we observe a noisy version of the underlying function:

where .

In this case, the model is not required to interpolate the training data, but it must come “close”.

The covariance of the noisy response is:

In other words:

The density of the observed data and the latent, noise-free function on the test points is:

Hence the posterior predictive density at a set of points is:

In the case of a single test point , this simplifies as follow:

where and .

If the mean function is zero, we can write the posterior mean as:

This is identical to the predictions from kernel ridge regression.

17.2.3 Comparison to kernel regression

In section 16.3.5 we discussed kernel regression, which is a generative approach to regression in which we approximate using a density kernel estimation.

This is very similar to our previous posterior mean , we few important differences:

- In a GP, we use Mercer kernel instead of density kernel. Mercer kernels can be defined on objects such as string or graphs, which is harder to do for density kernels.

- GP is an interpolator (when ) , so . By contrast, kernel regression is not an interpolator..

- GP is a Bayesian model, meaning we can estimate hyperparameters of the kernel by maximizing the marginal likelihood. By contrast, in kernel regression we must use cross-validation to estimate the kernel parameters, such as bandwidth.

- Computing the weights in kernel regression takes times, whereas computing the weigths for GP regression takes time (although there are approximations that can reduce it to

Also note that Bayesian linear regression is a special case of a GP. The former works in weight space whereas the latter works in function space.

17.2.5 Numerical issues

For notational simplicity, we assume the prior mean is zero, .

The posterior mean is . For reason of computation stability, it is unwise to compute directly .

Instead we can use a Cholesky decomposition which takes time. We then compute , where we have used the backlash operator to represent back-substitution.

Given this, we can compute the posterior mean for each test case in time using:

We can also compute the variance using:

where

Finally, the log marginal likelihood can be computed as:

17.2.6 Estimating the kernel

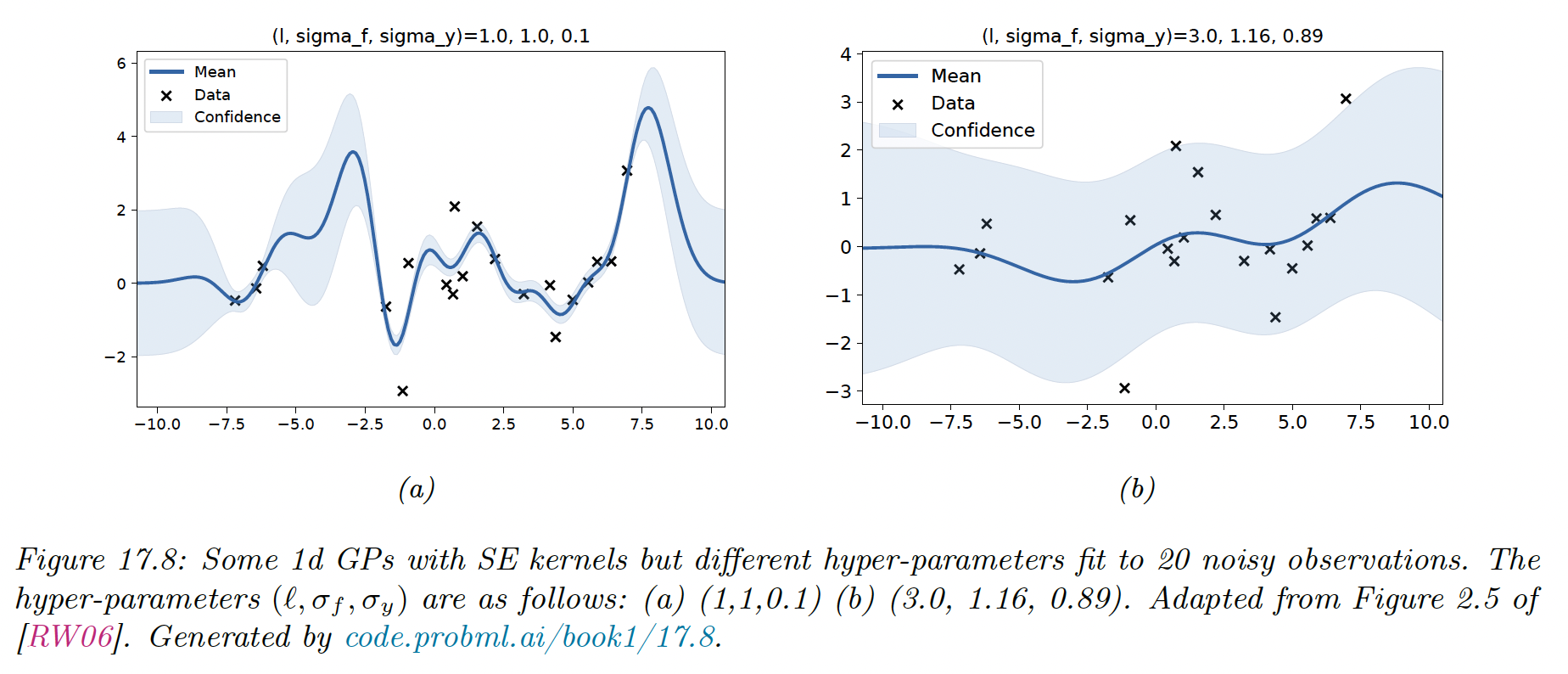

Most models have some free parameters that can play a great role on the predictions.

Let assume we perform a 1d regression using GP with a RBF kernel:

is the horizontal scale over which the function changes, control the vertical scale. We assume observation noise with variance .

We sampled 20 points from an MVN with covariance given by for a grid of points , and added observation noise of value

We then fit these points using a GP with the same kernel, but with a range of hyperparameters.

When changing from 1 to 3, the function goes from a good fit to very smooth.

17.2.6.1 Empirical Bayes

To estimate the kernel parameters (aka hyperparameters), we could use exhaustive grid search with the validation loss as an objective, but this can be slow (this is the approach used by nonprobabilistic methods such as SVMs).

We consider here the empirical Bayes approach, which use gradient-based approach, which are faster. We maximize the marginal likelihood (and not likelihood, because we marginalized out the latent Gaussian vector ):

Assuming the mean is 0, we have:

So the marginal likelihood is:

with the dependence of on implicit.

The first term is a data fit term, the second is a model complexity term (the third is just a constant).

If the length scale is small, the fit will be rather good so will be small. However, will be almost diagonal since most points will not be considered “near” any others, so will be large.

We now maximize the marginal likelihood:

where

It takes time to compute , and then time per hyperparameter to compute the gradient.

The form of depends on the form of the kernel, and which parameters we are taking derivatives with respect to. We often have constraints on the HP such as . In this case, we can define and then use the chain rule.

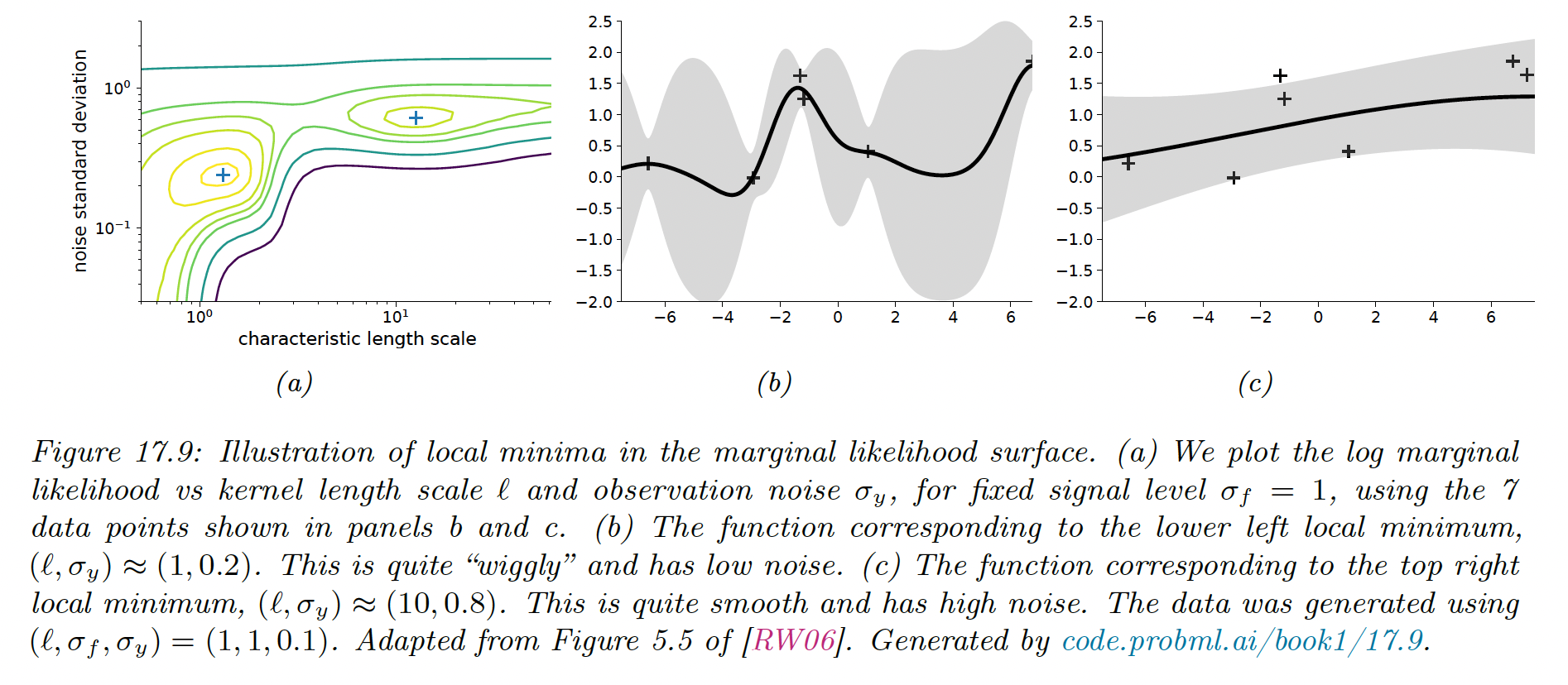

Given an expression for the log marginal likelihood and its derivative, we can estimate the kernel parameters with any gradient-based optimizer. However, since the objective is not convex, local minima can be a problem so we need multiple restart.

Notice that when the noise is high (around ), the marginal likelihood is insensitive to the length scale. Similarly, when the length scale is very short , the kernel interpolates exactly the data.

17.2.6.2 Bayesian inference

When we have a small number of data points (e.g. using GP for Bayesian optimization) using a point estimate of the kernel parameters can give poor results.

Instead, we can approximate the posterior distribution over the parameters, using slice sampling, Hamiltonian Monte Carlo or sequential Monte Carlo.

17.2.7 GPs for classification

So far, we have used GPs for regression, which uses Gaussian likelihood. In this case, the posterior is also a GP and all the computation can be performed analytically.

However, if the likelihood is non Gaussian, such as the Bernoulli likelihood for binary classification, we can no longer compute the posterior exactly.

There are various approximation we can make, and we use there the Hamiltonian Monte Carlo method, both for the latent Gaussian and the kernel parameters .

We specify the negative log joint:

We then use autograd to compute and , and use these gradients as input to a Gaussian proposal distribution.

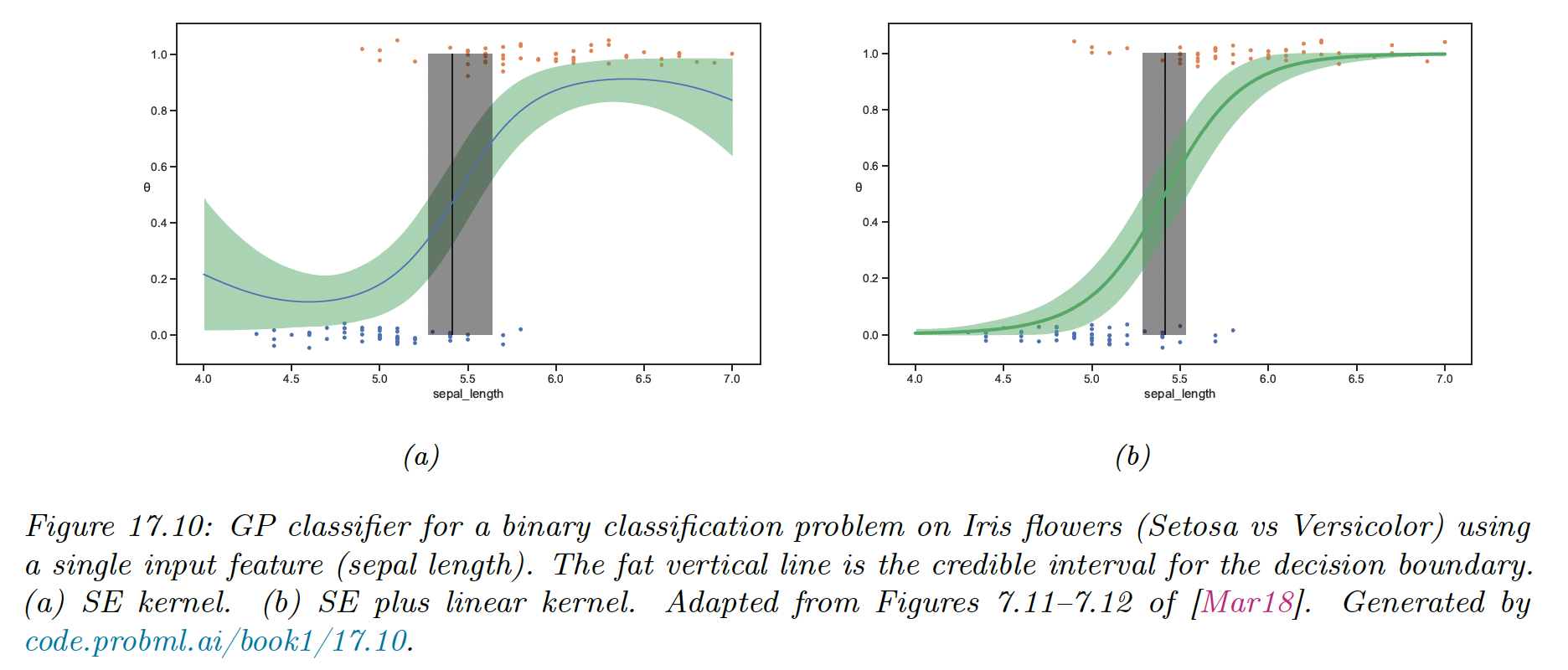

We consider a 1d example of binary classification:

The SE kernel curves probability toward 0.5 on the edges, because the prior mean function has and .

We can eliminate this artifact by using a more flexible kernel that encodes the knowledge that the probability is monotonically increasing or decreasing. We can use a linear kernel, scale it and add it to the SE kernel:

17.2.9 Scaling DPs to large datasets

The main issue with GPs (and kernel methods such as SVM) is that it requires inverting the kernel matrix, which takes times, making the method too slow for big datasets.

We review some speed-up techniques.

17.2.9.1 Sparse (inducing-point) approximations

A simple approach to speeding up GPs is to use less data. A better approach is to summarize the training points into inducing points or pseudo inputs .

This lets us replace with where is the vector of observed function values at training points.

By estimating we can learn to compress the training data and speed up the computation to . This is called a sparse GP. This whole process can be performed using the framework of variational inference.

17.2.9.2 Exploiting parallelization and kernel matrix structure

It takes to compute the Cholesky decomposition of , which is needed to solve the linear system and compute .

An alternative is to use linear algebra methods, often called Krylov subspace methods, which are based on matrix vector multiplication (MVM). These approaches are usually faster because they exploit structure in .

Moreover, even if the Kernel matrix doesn’t have a special structure, matrix multiplication can easily be parallelized over GPUs, unlike Cholesky based methods which are sequential.

This is the basis of the GPyTorch package.

17.2.9.3 Random feature approximation

We can approximate the feature map for many shift invariant kernels using a randomly chosen finite set of basis functions, thus reducing the cost to .

i) Random features for RBF kernel

For Gaussian RBF kernel, one can show that:

where the features vector are:

where and is a random Gaussian matrix, where the entries are sampled iid from where is the kernel bandwidth.

The bias of the approximation decreases as increases. In practice, we compute a single sample Monte Carlo approximation to the expectation by drawing a single random matrix.

The features in the equation above are called random Fourier features (RFF).

We can also use positive random features instead of trigonometric random features, which can be preferable for some applications, like models using attention. We can use:

Regardless of the choice of the features, we can obtain a lower variance estimate by ensuring the rows of are random but orthogonal. These are called orthogonal random features.

Such sampling can be conducted efficiently via Gram-Schmidt orthogonalization of the unstructured Gaussian matrices.

ii) Fastfood approximation

Storing the random matrix takes space and computing takes time, where is the input dimensionality and is the number of random features.

This can become prohibitive when , which it may needs to get any benefits over using the original set of features.

Fortunately, we can use the fast Hadamard transform to reduce the memory to and reduce the time to . This approach has been called fastfood as reference to the original term “kitchen sinks”.

iii) Extreme learning machines

We can use the random features approximation to the kernel to convert a GP into a linear model of the form:

where for RBF kernels. This is equivalent to a MLP with fixed and random input-to-hidden weights.

When , this corresponds to an over-parametrized model which can perfectly interpolate the training data.

Alternatively, we can use , but stack many nonlinear random layers together and just optimize the output weights. This has been called an extreme learning machine (ELM).